
Thinking in Pictures / Imagery-Based AI

This project was inspired by the book Thinking in Pictures by Temple Grandin, a professor of animal science who is on the autism spectrum and who describes herself as a visual thinker. The goal of this project is to better understand how visual thinkers process information and experience the world around them. The project involves building and studying AI systems based on visual imagery, as well as developing new assessments to measure visual thinking in people.

Selected publications

- [PNAS] Kunda, M. (2020). AI, visual imagery, and a case study on the challenges posed by human intelligence tests. Proceedings of the National Academy of Sciences, 117 (47), 29390-29397.

- ★ Best Paper Award ★ Yang, Y., McGreggor, K., and Kunda, M. (2020). Not quite any way you slice it: How different analogical constructions affect Raven’s Matrices performance. Eighth Annual Conference on Advances in Cognitive Systems (ACS). Winner of the inaugural ACS Patrick Henry Winston Award for Best Student Paper.

- [Cortex] Kunda, M. (2018). Visual mental imagery: A view from artificial intelligence. Cortex, 105, 155-172.

- Warford, N., and Kunda, M. (2018). Measuring individual differences in visual and verbal thinking styles. 40th Annual Meeting of the Cognitive Science Society, Madison, WI.

- [Intelligence] Kunda, M., Soulières, I., Rozga, A., & Goel, A. K. (2016). Error patterns on the Raven’s Standard Progressive Matrices Test. Intelligence, 59, 181-198.

- [JADD] Kunda, M., and Goel, A. K. (2011). Thinking in Pictures as a cognitive account of autism. Journal of Autism and Developmental Disorders, 41 (9), 1157-1177.


Film Detective: A Game to Help Kids Learn Social and Theory of Mind Reasoning Skills

In this project, we are working with collaborators in Vanderbilt’s Open-Ended Learning Environments group and at the Vanderbilt Kennedy Center’s Treatment and Research Institute for Autism Spectrum Disorders (TRIAD) to develop new, visually oriented, technology-based approaches for teaching theory of mind and social skills to adolescents on the autism spectrum. See our Film Detective website for more information.

Selected publications

- [JADD] Rashedi, R., Bonnet, K., Schulte, R., Schlundt, D., Swanson, A., Kinsman, A., Bardett, N., Warren, Z., Juarez, P., Biswas, G., & Kunda, M. (2021). Opportunities and challenges in developing technology-based social skills interventions for adolescents with autism spectrum disorder: A qualitative analysis of parent perspectives. Journal of Autism and Developmental Disorders.

- Chen, Z., Li, S., Rashedi, R., Zi, X., Elrod-Erickson, M., Hollis, B., Maliakal, A., Shen, X., Zhao, S., & Kunda, M. (2020). Creating and characterizing datasets for social visual question answering. IEEE Joint International Conference on Development and Learning and Epigenetic Robotics (ICDL/EPIROB).

- Zi, X., Li, S., Rashedi, R., Rushdy, M., Lane, B., Mishra, S., Biswas, G., Swanson, A., Kinsman, A., Bardett, N., Warren, Z., Juarez, P., and Kunda, M. (2020). Science learning and social reasoning in adolescents on the autism spectrum: An educational technology usability study. Proceedings of the 42nd Annual Meeting of the Cognitive Science Society.


Attention and Wearable Cameras

Visual attention affects virtually every aspect of intelligent behavior in humans, from perception and learning to communication and social interaction. Recent advances in wearable technology now let us measure human visual attention in real-world settings. This project uses wearable cameras and eye-tracking technologies to support research into the relationships between visual attention, learning, and intelligent problem-solving.

Selected publications

- Brown, E., Park, S., Warford, N., Seiffert, A., Kawamura, K., Lappin, J., and Kunda, M. (2018). An architecture for spatiotemporal template-based search. Advances in Cognitive Systems, 6, 101-118.

- Kunda, M., El-Banani, M., and Rehg, J. (2016). A computational exploration of problem-solving strategies and gaze behaviors on the Block Design task. In Proceedings of the 38th Annual Meeting of the Cognitive Science Society, Philadelphia, PA.

- Kunda, M., and Ting, J. (2016). Looking around the mind’s eye: Attention-based access to visual search templates in working memory. Advances in Cognitive Systems, 4, 113–129.


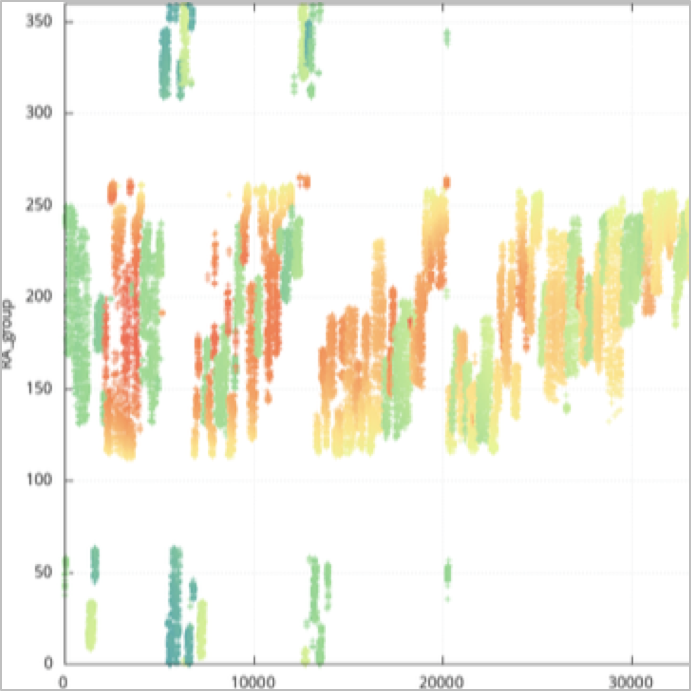

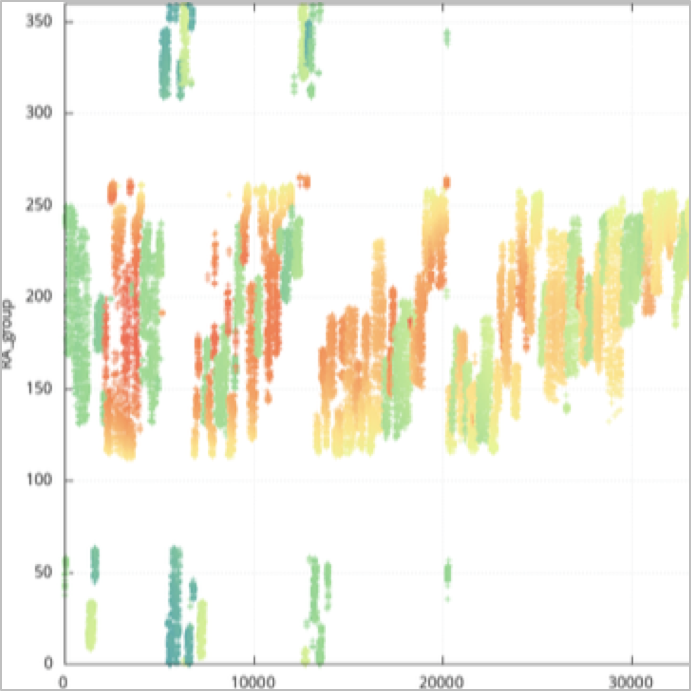

Data Visualization

The goal of this project is to understand visual cognition in the context of human data visualization activities, including studying and modeling the roles of visual perception (what you see), semantic knowledge (what you know), and goals (what you are trying to do). These models will help identify factors that contribute to human performance on data visualization tasks and will also lay the foundations for new intelligent data visualization technologies.

Selected publications

- Eilbert, J., Peters, Z., Eliott, F., Stassun, K., and Kunda, M. (2018). Shapes in scatterplots: Comparing human visual impressions and computational metrics. 40th Annual Meeting of the Cognitive Science Society, Madison, WI.

- Eliott, F., Stassun, K., and Kunda, M. (2018). IACI: A human-inspired computational architecture to help us understand visual data exploration. Sixth Annual Conference on Advances in Cognitive Systems, Menlo Park, CA.

- Eliott, F. M., Stassun, K., and Kunda, M. (2017). Visual data exploration: How expert astronomers use flipbook-style visual approaches to understand new data. In Proceedings of the 39th Annual Meeting of the Cognitive Science Society, London, UK.


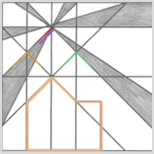

AI in Cognitive Assessments

The goal of this project is to develop new AI tools that make standardized cognitive assessments, as used in research and clinical practice, more informative. We focus mostly on nonverbal cognitive assessments, such as Raven’s Progressive Matrices, the Leiter, Embedded Figures, and Block Design, and we examine how AI models can be used to draw more detailed inferences from human response patterns.

Selected publications

- Palmer, J. H., and Kunda, M. (2018). Thinking in PolAR pictures: Using rotation-friendly mental images to solve Leiter-R Form Completion. AAAI National Conference.

- Ainooson, J., and Kunda, M. (2017). A computational model for reasoning about the Paper Folding task using visual mental images. In Proceedings of the 39th Annual Meeting of the Cognitive Science Society, London, UK.

- Kunda, M., Soulières, I., Rozga, A., & Goel, A. K. (2016). Error patterns on the Raven’s Standard Progressive Matrices Test. Intelligence, 59, 181-198.

- Kunda, M., McGreggor, K., and Goel, A. K. (2013). A computational model for solving problems from the Raven’s Progressive Matrices intelligence test using iconic visual representations. Cognitive Systems Research, 22-23, 47-66.


Developmentally Inspired AI

Some biologically inspired approaches to AI aim to emulate the neural structure of the brain. This project takes a parallel approach, looking at developmental aspects of human intelligence. In particular, we study how the physical environment, the maturation of motor and attentional skills, and interactions with social actors all play a role in defining what, and how, human infants learn about the world.

Selected publications

- Wang, X., Wang, X., and Kunda, M. (2018). Ordering of training inputs for a neural network learner. Sixth Annual Conference on Advances in Cognitive Systems, Menlo Park, CA.

- Wang, X., Eliott, F., Ainooson, J., Palmer, J., and Kunda, M. (2017). An object is worth six thousand pictures: The egocentric, manual, multi-image (EMMI) dataset. In International Conference on Computer Vision Workshop on Egocentric Perception, Interaction, and Computing (EPIC@ICCV), Venice, Italy.
