I am an educational technology and learning sciences researcher with the Open-Ended Learning Environment research group at the Institute for Software Integrated Systems, Vanderbilt University. My research interests lie in the learning sciences, educational technology, computing education research, collaborative learning, and the development of technology-enhanced learning environments. Specifically, I am interested in blending exploratory qualitative research methods with learning analytics to investigate:

- How do experts and novices carry out complex thinking processes during decision-making and problem-solving?

- How can we scaffold students in acquiring these thinking skills in intelligent technology-enhanced learning environments?

- How can collaborative problem-solving help learners acquire these process skills?

I am pursuing my postdoctoral research under the guidance of Prof. Gautam Biswas, Dept. of Electrical Engineering and Computer Science and Engineering, Vanderbilt University.

CURRENT PROJECTS

Representative Postdoctoral Project – 1

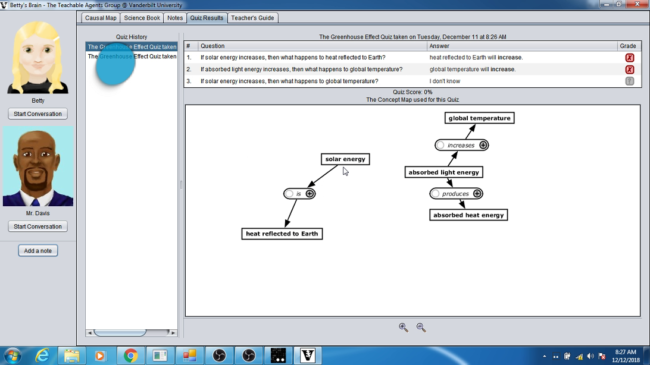

Betty’s Brain: Studying Learners’ Problem-Solving Strategies in a TEL Environment Using Eye-Tracking and Behavioral Data.

Researchers have highlighted how tracking learners’ gaze in technology-enhanced learning (TEL) environments can reveal their learning and problem-solving behaviors and provide a framework for developing personalized scaffolds that foster strategic learning. In this project, I use eye-gaze data and action logs from middle school students to study their cognitive strategies while they solve ill-structured problems in Betty’s Brain, an agent-based, open-ended science learning environment. I apply computational techniques such as sequence mining to the action logs to extract behavioral patterns that occur with different frequencies across performance levels, and then examine the corresponding gaze patterns to characterize the students’ cognitive strategies. The findings from this research will inform the design of cognitive and affective scaffolds in Betty’s Brain.
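The idea of extracting differentially frequent patterns can be illustrated with a minimal Python sketch. It is not the project's actual pipeline: it assumes each learner's action log is already a list of action labels (the labels "Read", "Edit", and "Quiz" are hypothetical, not Betty’s Brain's real action vocabulary), uses contiguous action n-grams as a simplified stand-in for full sequential pattern mining, and flags patterns with a plain support-difference threshold (`min_diff`, a hypothetical parameter) instead of a statistical test. All function names are illustrative.

```python
from collections import Counter


def ngrams(actions, n):
    """Return the contiguous length-n action patterns in one learner's log."""
    return [tuple(actions[i:i + n]) for i in range(len(actions) - n + 1)]


def sequence_support(group_logs, n):
    """Fraction of learners in a group whose log contains each n-gram at least once."""
    counts = Counter()
    for actions in group_logs:
        # A set, so each learner counts at most once per pattern.
        counts.update(set(ngrams(actions, n)))
    total = len(group_logs)
    return {pattern: c / total for pattern, c in counts.items()}


def differential_patterns(group_a, group_b, n=2, min_diff=0.5):
    """Patterns whose support differs between two groups by at least min_diff.

    Hypothetical simplification: a real analysis would typically test the
    difference for significance rather than use a fixed threshold.
    Returns (pattern, support_a - support_b) pairs, largest |difference| first.
    """
    support_a = sequence_support(group_a, n)
    support_b = sequence_support(group_b, n)
    result = []
    for pattern in set(support_a) | set(support_b):
        diff = support_a.get(pattern, 0.0) - support_b.get(pattern, 0.0)
        if abs(diff) >= min_diff:
            result.append((pattern, round(diff, 2)))
    # Sort by magnitude, then by pattern so ties are ordered deterministically.
    return sorted(result, key=lambda item: (-abs(item[1]), item[0]))


# Hypothetical toy logs for two performance groups.
high_performers = [["Read", "Edit", "Quiz"], ["Read", "Edit", "Read", "Edit"]]
low_performers = [["Edit", "Edit", "Quiz"], ["Edit", "Quiz", "Edit"]]

print(differential_patterns(high_performers, low_performers, n=2, min_diff=0.75))
# → [(('Read', 'Edit'), 1.0)]
```

In this toy example, reading before editing appears in every high performer's log and in no low performer's log, so it is the only pattern that clears the 0.75 threshold; in practice such patterns would be the starting point for examining the matching gaze data.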

Representative Postdoctoral Project – 2

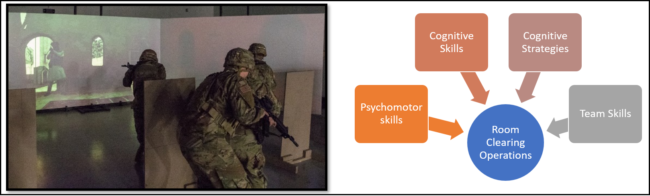

Learner Modeling of Cognitive and Psychomotor Processes for Dismounted Battle Drills.

Operations such as “Enter and Clear a Room” and “React to Direct Fire Contact” are essential dismounted battle drills (DBDs) for urban warfare conducted by the armed forces. These operations require soldiers to develop effective psychomotor and cognitive skills and strategies, along with the ability to work in teams. For example, soldiers must identify and differentiate enemy combatants from noncombatants inside the threat area while, at the same time, providing cover for other team members. This project aims to develop intelligent tutors that support team training for DBDs in virtual and augmented reality environments. Our focus is on how to evaluate psychomotor, cognitive, strategic, and affective processes using multiple monitoring modalities, such as computer logs, video analysis, eye tracking, and physiological sensors. In particular, the project proposes several performance and effectiveness metrics for the Squad Advanced Marksmanship Trainer (SAM-T), a virtual battle drill practice environment for soldiers.

Representative Postdoctoral Project – 3

Affective Proxies for Learners’ Gaze Behavior.

Multimodal data make it possible to study learner behaviors and states more richly than before. However, current studies share a common limitation: their findings are difficult to scale up because the apparatus they rely on does not scale. In this project, we attempt to identify measurements from scalable modalities, such as facial data, and to examine which measurements from richer, more granular modalities, such as eye tracking, they correspond to most closely. In other words, we aim to identify pervasive, ubiquitous proxies for measurements that have been reported to be obtrusive and detrimental to the ecological validity of studies and experiments. We are demonstrating these approaches using eye-tracking and facial data from several studies. Recently, we identified our first set of facial proxies for gaze-based measurements; the findings suggest a close connection between facial features and gaze variables.
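One simple way to picture the search for facial proxies is a correlation screen, sketched below in Python under stated assumptions. This is a swapped-in illustrative technique, not necessarily the analysis used in the project: it assumes facial features and gaze variables have already been aligned into equal-length series over the same observation windows, computes Pearson's r for every facial/gaze pair, and keeps pairs whose |r| meets a threshold. The feature names and the `min_abs_r` parameter are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length series (0.0 if either is constant)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        # A constant series carries no linear signal; avoid dividing by zero.
        return 0.0
    return cov / (sd_x * sd_y)


def candidate_proxies(facial, gaze, min_abs_r=0.5):
    """Facial/gaze pairs whose |r| >= min_abs_r, strongest first.

    `facial` and `gaze` map hypothetical feature names to series that are
    assumed to be aligned window by window.
    """
    pairs = []
    for face_name, face_values in facial.items():
        for gaze_name, gaze_values in gaze.items():
            r = pearson(face_values, gaze_values)
            if abs(r) >= min_abs_r:
                pairs.append((face_name, gaze_name, round(r, 3)))
    return sorted(pairs, key=lambda p: (-abs(p[2]), p[0], p[1]))


# Hypothetical toy data: four aligned observation windows.
facial_features = {"feature_a": [1, 2, 3, 4], "feature_b": [2, 2, 2, 2]}
gaze_variables = {"fixation_duration": [2, 4, 6, 8], "saccade_count": [8, 6, 4, 2]}

print(candidate_proxies(facial_features, gaze_variables))
```

A correlation screen like this only nominates candidate proxies; whether a facial measure can actually stand in for a gaze measure would still need to be checked on held-out data and across studies.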