Maithilee Kunda has been promoted to Associate Professor of Computer Science with tenure at Vanderbilt. (Yay!)


James Ainooson ties for 4th place on the global ARCathon 2022 challenge!!!

James Ainooson has been studying methods for search-based program synthesis using his new Visual Imagery Reasoning Language (VIMRL). His work (with assists from our other PhD students Ryan Yang, Deepayan Sanyal, and Joel Michelson) just tied for 4th place on the 2022 ARCathon challenge:

https://lab42.global/past-challenges/arcathon-2022/

Let’s see what happens in the 2023 challenge!

Way to go, James!

Postdoc Caoimhe Stack to present work on theory of mind assessment at ACS 2022

Congrats to our entire theory of mind team for all the great work that led to this paper:

Stack, C., Myers, S., Farhana, E., Roskes, A., Shen, X., Zhao, S., Maliakal, A., Rashedi, R., Michelson, J., and Kunda, M. (2022). Framework for a multi-dimensional test of theory of mind for humans and AI systems. Tenth Annual Conference on Advances in Cognitive Systems.

James Ainooson and Joel Michelson accepted for 2023 AAAI Doctoral Consortium

Congratulations to PhD students James and Joel for being accepted to the 2023 AAAI Doctoral Consortium.

James is doing his dissertation research on:

Modeling strategies as programs: How to study strategy differences in intelligent systems with program synthesis

Joel is doing his dissertation research on:

Theory of mind: a familiar aspect of humanity to give machines

Ryan Yang and Joel Michelson to present papers at 2022 Workshop on Artificial Intelligence & Cognition (AIC)

Congratulations to Ryan and Joel!

8th International Workshop on Artificial Intelligence and Cognition (AIC)

Ryan’s paper:

Yang, Y., Sanyal, D., Michelson, J., Ainooson, J., & Kunda, M. (2022). A conceptual chronicle of solving Raven’s Progressive Matrices computationally. 8th International Workshop on Artificial Intelligence and Cognition (AIC). Örebro, Sweden. Oral presentation.

Joel’s paper:

Michelson, J., Sanyal, D., Ainooson, J., Yang, Y., & Kunda, M. (2022). Experimental design and facets of evidence for computational theory of mind. 8th International Workshop on Artificial Intelligence and Cognition (AIC). Örebro, Sweden. Oral presentation.

Ryan Yang to present work on analogy at CogSci 2022

Ryan’s paper on imagery-based problem solving has been accepted to CogSci 2022. Congratulations, Ryan!

Yang, Y., Sanyal, D., Michelson, J., Ainooson, J., & Kunda, M. (2022). An end-to-end imagery-based modeling of solving geometric analogy problems. 44th Annual Meeting of the Cognitive Science Society. Poster presentation.

Film Detective project featured in New York Times

Our Film Detective project, in which we are creating a new educational video game to help adolescents on the autism spectrum learn theory of mind and social reasoning skills, was featured in a New York Times article on the use of AI in special education.

——————

How Robots Can Assist Students With Disabilities

By Alina Tugend

New tools use artificial intelligence to assist students with autism and dyslexia and address accessibility for those who are blind or deaf.

https://www.nytimes.com/2022/03/29/technology/ai-robots-students-disabilities.html

Maithilee Kunda to speak at Mar. 4 screening of new sci-fi film: After Yang

Part of the Nashville Belcourt Theater’s “Science on Screen” series.

From the Belcourt website:

“Science On Screen | Fri, Mar 4 at 8:00pm: Post-screening discussion on The Inner Lives of AI (and Us) with Maithilee Kunda, assistant professor of computer science at Vanderbilt University and director of Vanderbilt’s Laboratory for Artificial Intelligence and Visual Analogical Systems

When Yang — a lifelike, artificially intelligent android that Jake and Kyra buy as a companion for their adopted daughter — abruptly stops functioning, Jake just wants him repaired quickly and cheaply. But having purchased Yang “certified refurbished” from a now-defunct store, Jake’s led first to a conspiracy theorist technician and then a technology museum curator, who discovers that Yang was actually recording memories. Jake’s quest eventually becomes one of existential introspection and a contemplation of his own life, as it passes him by.

An aesthete at heart, Kogonada only vaguely hints at the futuristic science fiction setting (and accompanying climate catastrophe) — instead crafting a serene, meditative, compassionate story that inverts the trusted theme of robots exploring what it means to be human, by showing a human trying to understand this artificial being who was part of his family. Punctuated with humor and joyousness, AFTER YANG’s quiet power lies in its timely contemplation of how we create meaning and experience loss.”

Two IES blog posts feature Film Detective project and discussion about diversity in science

Film Detective: How an AI-powered game aims to improve outcomes for students with ASD, by Bennett Lunn. Inside IES Research, US Institute of Education Sciences (IES). Nov 3, 2021.

Perspective matters: How diversity of background, expertise, and cognition can lead to good science, by Bennett Lunn. Inside IES Research, US Institute of Education Sciences (IES). Aug 17, 2021.

Paper led by Roxanne Rashedi published in JADD

Excited to have our paper appear in JADD (Journal of Autism and Developmental Disorders)! This work was led by postdoc Roxanne Rashedi. Congratulations to Roxanne and the rest of our amazing team!

Rashedi, R., Bonnet, K., Schulte, R., Schlundt, D., Swanson, A., Kinsman, A., Bardett, N., Warren, Z., Juarez, P., Biswas, G., & Kunda, M. (2021). Opportunities and challenges in developing technology-based social skills interventions for adolescents with autism spectrum disorder: A qualitative analysis of parent perspectives. Journal of Autism and Developmental Disorders. [pdf]

Ryan Yang presents paper on automatic test item generation at ACS 2021

Congratulations to Ryan and the rest of the crew!

Yang, Y., Sanyal, D., Michelson, J., Ainooson, J., & Kunda, M. (2021). Automatic item generation of figural analogy problems: A review and outlook. Proceedings of the Ninth Annual Conference on Advances in Cognitive Systems (ACS). [pdf]

Joel Michelson to present work on new animal-inspired theory of mind challenge

Joel’s paper on a new theory-of-mind challenge problem for artificial agents has been accepted as a long paper with an oral presentation at the 2021 AAAI Fall Symposium Series (FSS). Congratulations, Joel!

Michelson, J., Sanyal, D., Ainooson, J., Yang, Y., & Kunda, M. (2021). Social cognition paradigms ex machinas. Proceedings of the AAAI Fall Symposium on Computational Theory of Mind for Human-Machine Teams. [pdf]

Maithilee Kunda gives virtual keynote at Italian CogSci Conference

Maithilee Kunda was invited to give a keynote talk at the First Graduate Conference of the Italian Association for Cognitive Science.

Title: AI, visual imagery, and the many unsolved challenges of human intelligence tests.

James Ainooson passes PhD proposal

Congratulations, James!

Title: Learning Programs for Modeling Strategy Differences in Visuospatial Reasoning

Committee:

Prof. Maithilee Kunda, Chair (VU)

Prof. Armando Solar-Lezama (MIT)

Prof. Bradley Malin (VU)

Prof. Gautam Biswas (VU)

Prof. Tyler Derr (VU)

Paper on block design task analysis accepted for oral presentation at CogSci

Congratulations to Avery Dunn, Alice Qiao, and Maya Johnson, the co-first authors on this paper! (22% acceptance rate for oral presentations.)

*Dunn, A., *Qiao, A., *Johnson, M., & Kunda, M. (2021). Measuring more to learn more from the block design test: A literature review. Proceedings of the 43rd Annual Meeting of the Cognitive Science Society. pp. 611-617. *Co-first authors. Accepted for oral presentation (22% acceptance). [pdf]

Paper published in Proceedings of the National Academy of Sciences (PNAS)

Maithilee Kunda’s review of imagery-based AI for solving human intelligence tests has been published in PNAS!

Kunda, M. (2020). AI, visual imagery, and a case study on the challenges posed by human intelligence tests. Proceedings of the National Academy of Sciences, 117 (47), 29390-29397. [pdf]

Our research featured on CBS 60 Minutes with Anderson Cooper

Video ▶ “Recruiting for talent on the autism spectrum” on CBS 60 Minutes with Anderson Cooper (Vanderbilt portion begins at 7:40).

Video ▶ A 90-second snippet from our portion of this 60 Minutes piece.

Research behind the demo featured on 60 Minutes, including a more in-depth look at the data from our volunteers Dan Burger and Anderson Cooper.

New NSF EAGER grant for studying visuospatial skills, autism, and employment

PI Maithilee Kunda will be leading this grant, with Vanderbilt collaborators Frank Tong, Gautam Biswas, Tim Vogus, Keivan Stassun, and Jesse Spencer-Smith, and VUMC collaborator Zack Warren.

Title: “NSF2026: EAGER: Collaborative Research: Enhancing Employment for Neurodiverse Individuals through Next-Gen, AI-Enabled Assessments of Visuospatial Cognition”

Ryan Yang receives Best Student Paper award at ACS Conference

Way to go, Ryan! Ryan received the inaugural Patrick Henry Winston Award for Best Student Paper at the 2020 Advances in Cognitive Systems (ACS) conference.

From the award selection committee: “Yuan Yang’s paper on the Raven’s Progressive Matrices provides a clear description of the problem space for visual analogy from the pixel level to the rules that operate over them and the similarity criteria necessary to guide reasoning. From a cognitive systems perspective, this work highlights the importance of combining high-level control with low-level representations to achieve high performance on this challenging cognitive task.”

Yang, Y., McGreggor, K., and Kunda, M. (2020). Not quite any way you slice it: How different analogical constructions affect Raven’s Matrices performance. Eighth Annual Conference on Advances in Cognitive Systems (ACS). Winner of the inaugural ACS Patrick Henry Winston Award for Best Student Paper. [pdf]

Paper on TinySocial dataset (led by Phil Chen) accepted in ICDL/EPIROB

Congratulations to Phil, Shiyao, Roxanne, Morgan, Bryan, Angela, Shelley, and Ellen! Great teamwork on this paper!

Chen, Z., Li, S., Rashedi, R., Zi, X., Elrod-Erickson, M., Hollis, B., Maliakal, A., Shen, X., Zhao, S., & Kunda, M. (2020). Creating and characterizing datasets for social visual question answering. IEEE Joint International Conference on Development and Learning and Epigenetic Robotics (ICDL/EPIROB). [pdf]

Four papers accepted to ACS 2020

Congratulations to James, Joel, Ryan, and Patrick!

Ainooson, J., Michelson, J., Sanyal, D., Palmer, J. H., and Kunda, M. (2020). Strategies for visuospatial reasoning: Experiments in sufficiency and diversity.

Michelson, J., Sanyal, D., Ainooson, J., and Kunda, M. (2020). A measure of visuospatial reasoning skills: Painting the big picture.

Yang, Y., McGreggor, K., and Kunda, M. (2020). Not quite any way you slice it: How different analogical constructions affect Raven’s Matrices performance.

Hua, T., and Kunda, M. (2020). Modeling Gestalt visual reasoning on Raven’s Matrices using generative image inpainting techniques.

Two papers accepted at CogSci 2020 (led by Mandy Zi and Sean Cha)

Congratulations to the teams on both of these projects!

Cha, S., Ainooson, J., Chong, E., Soulières, I., Rehg, J., and Kunda, M. (2020). Enhancing cognitive assessment through multimodal sensing: A case study using the block design test. Proceedings of the 42nd Annual Meeting of the Cognitive Science Society. pp. 2546-2552. [pdf]

Zi, X., Li, S., Rashedi, R., Rushdy, M., Lane, B., Mishra, S., Biswas, G., Swanson, A., Kinsman, A., Bardett, N., Warren, Z., Juarez, P., and Kunda, M. (2020). Science learning and social reasoning in adolescents on the autism spectrum: An educational technology usability study. Proceedings of the 42nd Annual Meeting of the Cognitive Science Society. [pdf]

Maithilee Kunda gives conference keynote at ICCM

Keynote title: Imagery-based AI.

17th Annual International Conference on Cognitive Modeling. Montreal, Canada.

Chris Ketchum has poem accepted for publication

Chris Ketchum has had one of his poems accepted for publication in Five Points, a journal of literature and art published by Georgia State University. The poem, titled “Disordering,” will appear in the Winter 2019 issue.

Congratulations, Chris!

Roxanne Rashedi has abstract accepted for oral presentation at AME 2019

Our extended abstract, “Reasoning Together: Promoting Mutual Understanding in Technology Design for Individuals with Autism,” has been accepted for oral presentation at the Association for Moral Education’s 45th annual conference. Authors are Roxanne Rashedi and Maithilee Kunda.

Congratulations, Roxanne!

Maithilee Kunda gives invited talk at NAS Colloquium

Maithilee Kunda gave an invited talk on “Imagery-based AI” at the National Academy of Sciences Sackler Colloquium “The Brain Produces Mind by Modeling.”

The colloquium was organized by Richard Shiffrin, Danielle Bassett, Sophie Deneve, Nikolaus Kriegeskorte, and Josh Tenenbaum. Many of the other interesting talks from this colloquium can be found here.

Two papers accepted to CogSci 2019

We have two papers that will be presented at CogSci 2019 this summer in Montreal, Canada:

Technology-Based Cognitive Enrichment for Animals in Zoos: A Case Study and Lessons Learned. Benjamin J. Scheer, Fidel Cano Renteria, and Maithilee Kunda. (See here for a nice video about Ben’s work!)

AI and Cognitive Testing: A New Conceptual Framework and Roadmap. Maithilee Kunda.

VU spotlight on Ben Scheer’s work with orangutans

Vanderbilt has just done a nice writeup and video about lab member Ben Scheer, highlighting his work on our “orangutan app” for Zoo Atlanta and many other projects. Way to go, Ben!

Read the full story (and see the video) here:

https://news.vanderbilt.edu/2019/02/22/student-creates-app-for-orangutans/

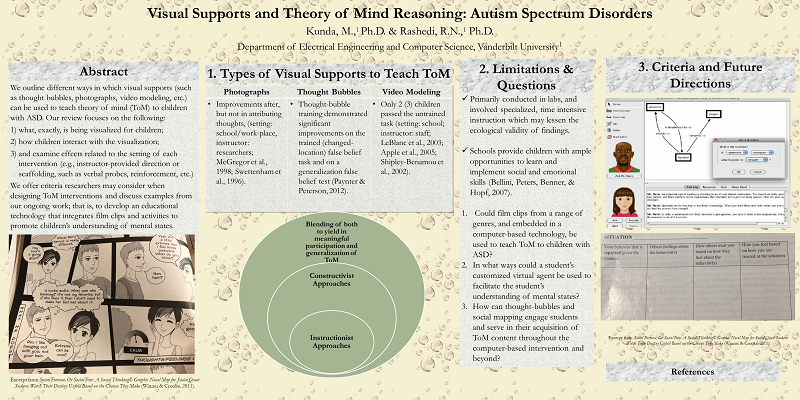

Roxanne Rashedi presents poster at IES PI meeting

Our newest postdoctoral research fellow, Roxanne Rashedi, presented a poster at the IES PI meeting in Washington, DC, on our new IES-funded project for teaching theory of mind and social skills to adolescents on the autism spectrum.

Poster title: Visual Supports and Theory of Mind Reasoning: Autism Spectrum Disorders

Temple Grandin visits our Imagery-Based AI class

We were very excited to host Dr. Temple Grandin at our class in Imagery-Based AI.

Dr. Grandin also gave a keynote at a conference on Envisioning the Future of Human-Technology Partnerships (organized in part by Vanderbilt’s new Frist Center for Autism and Innovation).

In addition, she was invited to speak as part of Vanderbilt’s Chancellor’s lecture series:

https://news.vanderbilt.edu/2018/11/30/grandin-rejects-low-expectations-insists-workforce-critically-needs-people-with-autism-in-vanderbilt-lecture/

Vanderbilt’s new Frist Center for Autism and Innovation

We are excited to be a part of Vanderbilt’s newly created Frist Center for Autism and Innovation:

https://my.vanderbilt.edu/autismandinnovation/

This new center aims to support and develop the neurodiverse talents of individuals with autism, especially in relation to workforce and employment settings, and was made possible by a $10 million endowed gift from Jennifer and Billy Frist. Full news story here:

https://news.vanderbilt.edu/2018/11/08/vanderbilt-university-launches-the-frist-center-for-autism-and-innovation/

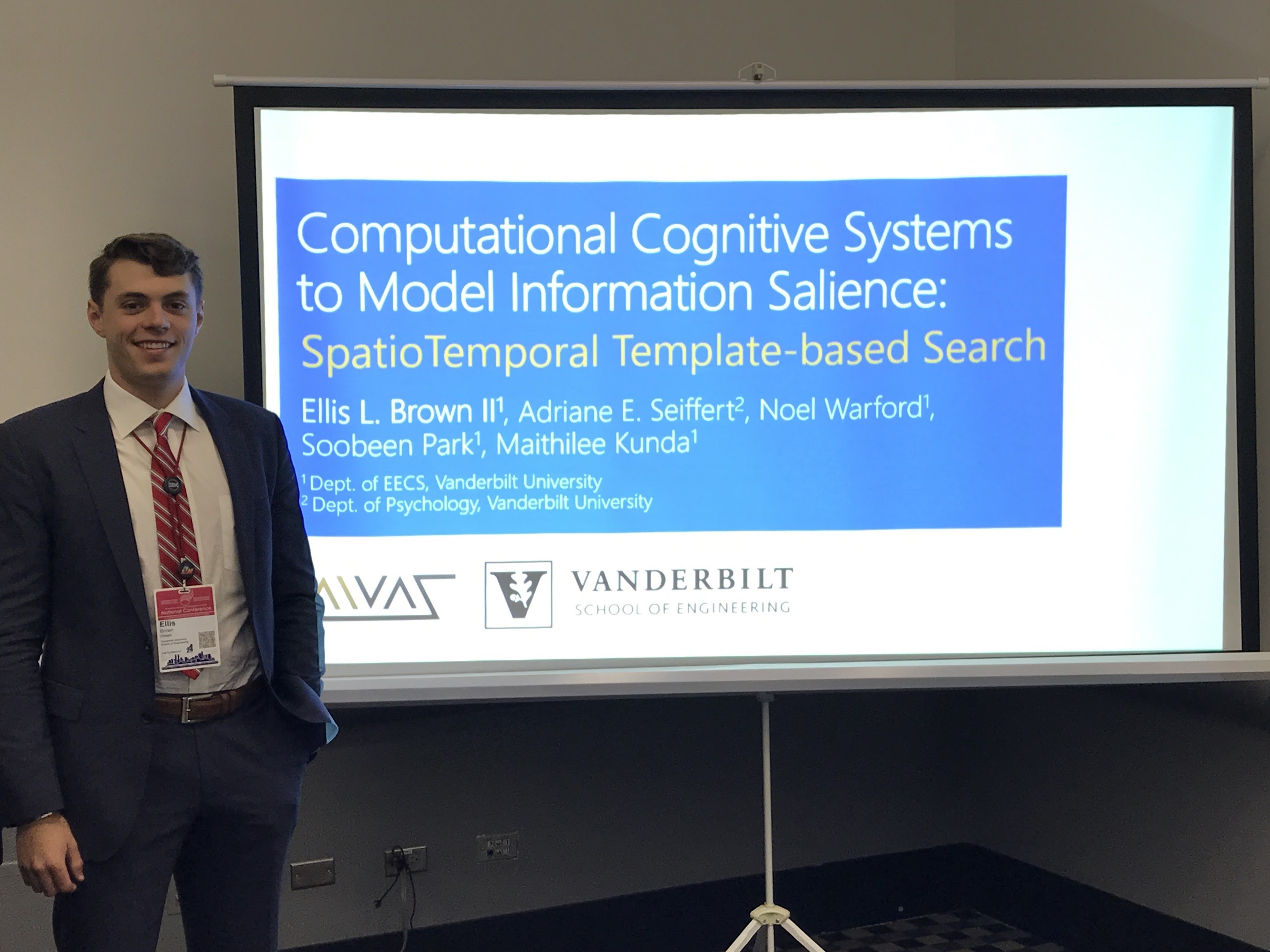

Ellis Brown, Fernanda Eliott, and Xiaotian Wang present work at ACS 2018

We had a strong showing at this year’s Advances in Cognitive Systems conference, which took place at Stanford University. Ellis Brown gave an oral presentation on our work modeling human visual attention and salience for moving targets. Fernanda Eliott presented a poster on IACI, a proposed new cognitive architecture for modeling and studying open-ended visual data exploration. And Xiaotian Wang presented a poster on our investigations of how different training regimes affect the performance of neural networks for object recognition.

Full citations below:

Brown, E., Park, S., Warford, N., Seiffert, A., Kawamura, K., Lappin, J., and Kunda, M. (2018). SpatioTemporal Template-based Search: An architecture to model human search for spatiotemporal targets. Sixth Annual Conference on Advances in Cognitive Systems, Menlo Park, CA.

Eliott, F., Stassun, K., and Kunda, M. (2018). IACI: A human-inspired computational architecture to help us understand visual data exploration. Sixth Annual Conference on Advances in Cognitive Systems, Menlo Park, CA.

Wang, X., Wang, X., and Kunda, M. (2018). Ordering of training inputs for a neural network learner. Sixth Annual Conference on Advances in Cognitive Systems, Menlo Park, CA.

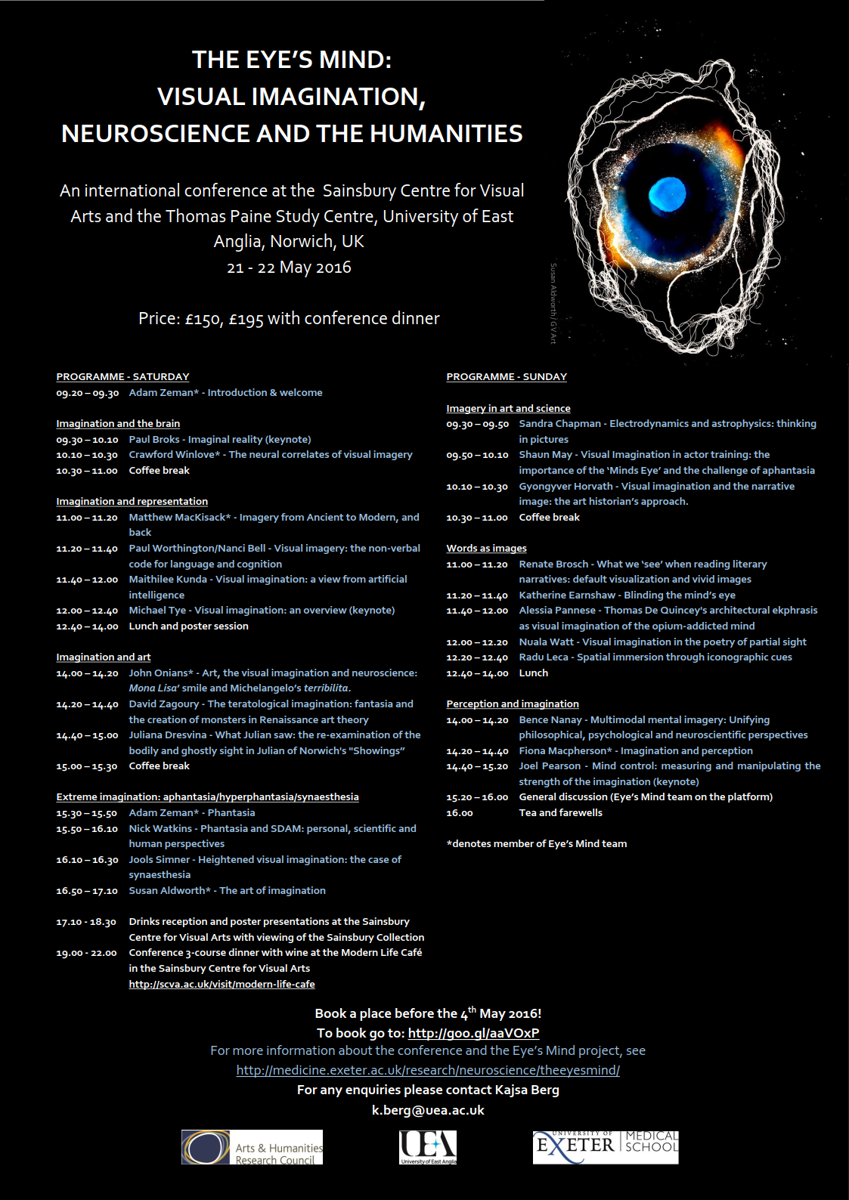

Paper on visual imagery in AI published in journal Cortex

Our paper surveying computational models of visual mental imagery in different areas of AI research (visuospatial reasoning, language understanding, etc.) has just been published in the journal Cortex, as part of a special issue on “The Eye’s Mind – visual imagination, neuroscience and the humanities.”

Kunda, M. (2018). Visual mental imagery: A view from artificial intelligence. Cortex, 105, 155-172. [pdf] [bibtex]

New $1.4M IES grant for teaching social skills to students on the autism spectrum

The lab has received a new $1.4M grant from the Institute of Education Sciences (IES) to develop a new educational technology platform for teaching theory of mind and social skills to middle school students on the autism spectrum.

Maithilee Kunda is the principal investigator. This project builds on an existing educational technology called “Betty’s Brain,” developed by collaborator Gautam Biswas and his Open-Ended Learning Environments (OELE) research group. Other key collaborators include Pablo Juarez, Zack Warren, Amy Kinsman, and Amy Swanson from the Vanderbilt Kennedy Center’s Treatment and Research Institute for Autism Spectrum Disorders.

The official title of the project is: Betty’s Mind: A Theory of Mind and Social Reasoning Intervention for Adolescents with Autism Spectrum Disorders Based on a Learning by Teaching Approach. More info can be found here:

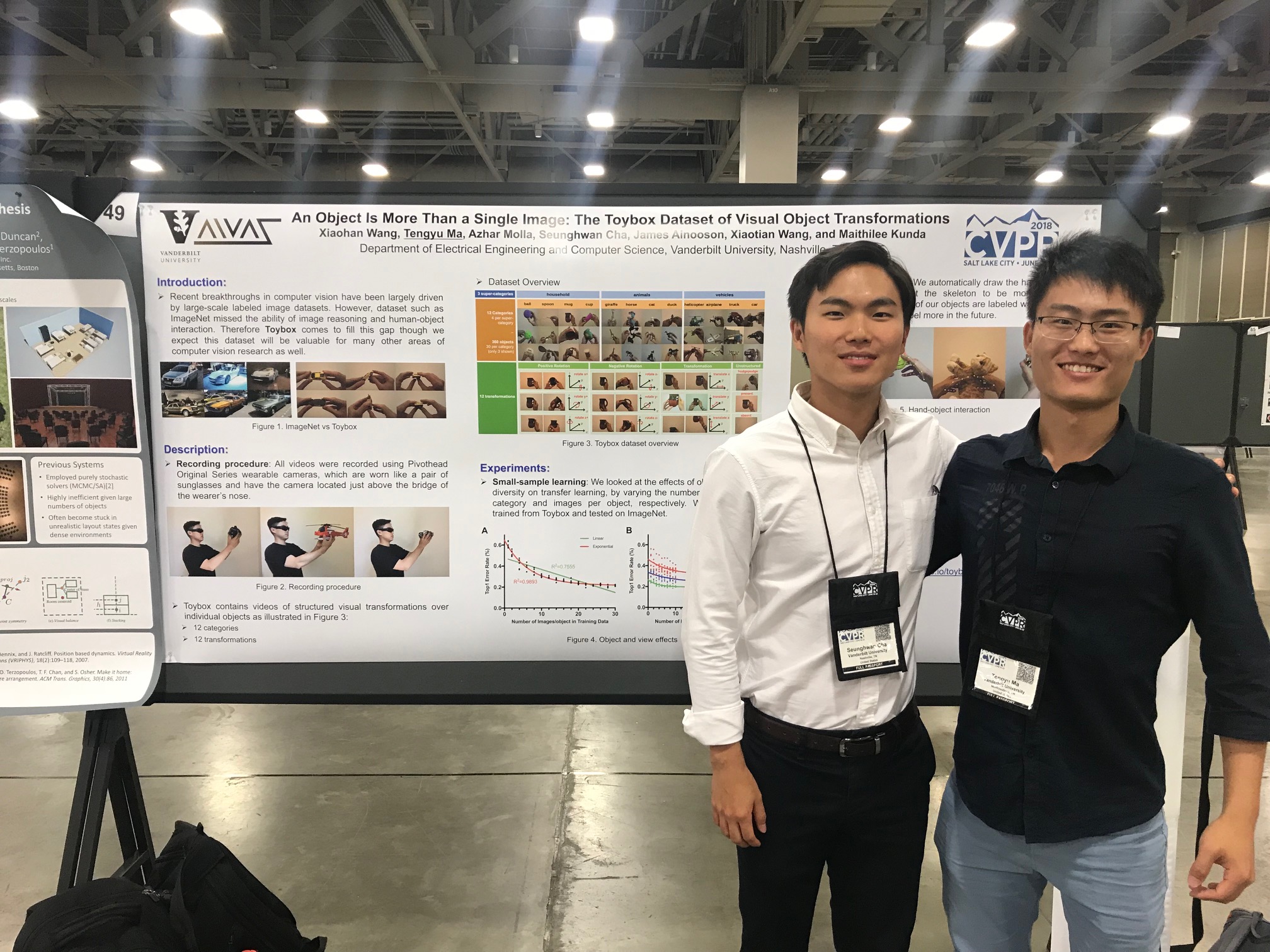

Tengyu Ma and Sean Cha present Toybox at CVPR workshop

Tengyu Ma and Sean Cha presented our newly released Toybox dataset at the 4th CVPR Workshop on Vision Meets Cognition: Functionality, Physics, Intentionality and Causality. The published short paper can be found here.

(Sean, left, and Tengyu, right… Great job!)

Toybox dataset online!

Our Toybox dataset has been posted online:

https://aivaslab.github.io/toybox/

This is the culmination of hundreds of hours of effort from many of our lab members. A tip of the hat to Xiaohan Wang, whose ideas catalyzed this work and who led the dataset effort.

The dataset is described in more detail in this preprint:

https://arxiv.org/abs/1806.06034

Two papers accepted to CogSci 2018

We have two papers accepted to CogSci 2018, the Annual Meeting of the Cognitive Science Society:

“Shapes in Scatterplots: Comparing Human Visual Impressions and Computational Metrics,” by Joe Eilbert, Zameese Peters, Fernanda Eliott, Keivan Stassun, and Maithilee Kunda (oral presentation, acceptance rate 31%)

“Measuring Individual Differences in Visual and Verbal Thinking Styles,” by Noel Warford and Maithilee Kunda (poster presentation)

Congratulations to all of the coauthors!

Maithilee Kunda presents lecture at AAAS headquarters

Maithilee Kunda was invited to Washington, DC, to speak at the annual winter lecture of the American Association for the Advancement of Science (AAAS) program of Dialogue on Science, Ethics, and Religion (DoSER). The event was titled “Of Minds and Machines: What Artificial Intelligence Tells Us About Ourselves,” and Dr. Kunda served as a co-presenter along with Dr. Paul Scherz, assistant professor of moral theology and ethics at the Catholic University of America.

https://www.aaas.org/news/aaas-explores-what-artificial-intelligence-teaches-us-about-ourselves

Ben Scheer creates VR for Nashville musician

Lab member Ben Scheer made a virtual reality “music video” experience for a recent single by Nashville musician Kate Tucker.

See discussion of Ben’s work at the end of this article:

TVD Premiere: Kate Tucker, “In Your Arms” Single and its Virtual Reality Experience

Paper accepted to AAAI-18

Our paper “Thinking in PolAR Pictures: Using Rotation-Friendly Mental Images to Solve Leiter-R Form Completion” has been accepted for presentation at the 2018 AAAI conference. The paper is authored by Josh Palmer and Maithilee Kunda. Congratulations, Josh!

EMMI dataset paper presented at EPIC@ICCV

Our first paper on our new dataset, the Egocentric, Manual, Multi-Image (EMMI) dataset, was presented by Maithilee Kunda at the Egocentric Perception, Interaction, and Computing (EPIC) workshop at the ICCV computer vision conference in Lido, Italy.

Link to abstract and paper.

Full dataset to be published soon!

Ellis Brown gives talk at AISES 2017 National Conference

Ellis Brown gave an oral presentation about our work on using computational cognitive systems to model human visual information salience at the 2017 national conference of the American Indian Science and Engineering Society (AISES). Congratulations, Ellis!

Full citation: Brown, E. L., II, Seiffert, A. E., Warford, N., Park, S., & Kunda, M. (2017, September 21). Computational Cognitive Systems to Model Information Salience. Oral presentation given at the American Indian Science and Engineering Society National Conference. Denver, CO.

Maithilee Kunda serves as panelist for AAAS event on AI and society

Maithilee Kunda was invited to be a speaker and panelist for a half-day symposium about AI and society hosted by the American Association for the Advancement of Science (AAAS) program of Dialogue on Science, Ethics, and Religion (DoSER) at a conference for members of the Religion News Association (RNA). The event was held in Nashville, TN.

Lab receives NSF grant

We have been awarded a research grant through the National Science Foundation’s Science of Learning program. The grant is titled “Learning Visuospatial Reasoning Skills from Experience” and is led by Maithilee Kunda, in collaboration with Bethany Rittle-Johnson (Psychology & Human Development, Vanderbilt) and Linda Smith (Psychological & Brain Sciences, Indiana University).

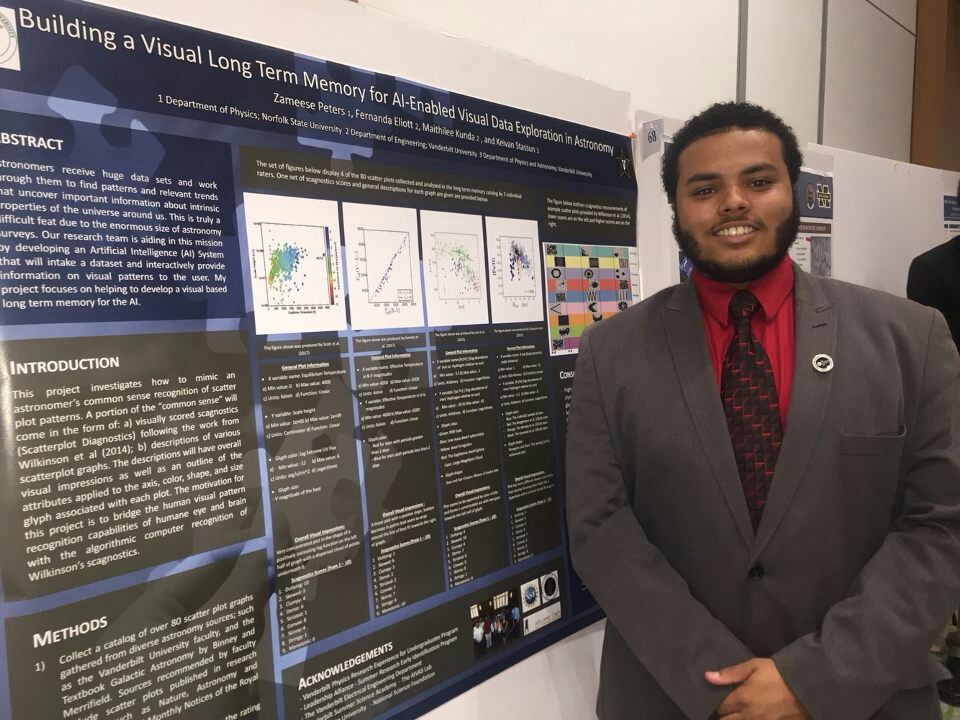

Zameese Peters presents research at 2017 Leadership Alliance National Symposium

Zameese Peters gave an oral presentation about his research on “Building a Visual Long Term Memory for Artificial Intelligence Enabled Data Exploration in Astronomy” at the 2017 Leadership Alliance National Symposium in Hartford, Connecticut.

Zameese also presented his work at the Vanderbilt Summer Science Academy symposium on August 3.

Congrats, Zameese!

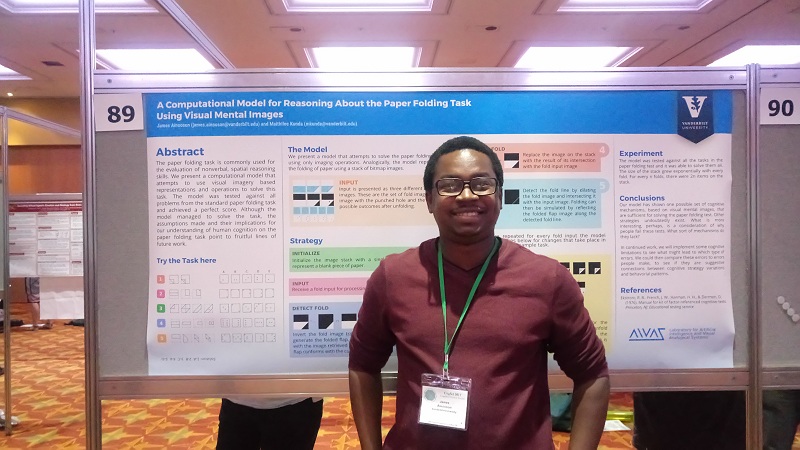

CogSci 2017: Fernanda Eliott and James Ainooson present posters

Fernanda and James each presented a poster at the Cognitive Science conference in London, UK:

Fernanda Eliott: Visual data exploration: How expert astronomers use flipbook-style visual approaches to understand new data

James Ainooson: A computational model for reasoning about the Paper Folding task using visual mental images

New Vanderbilt Center for Autism and Innovation

Maithilee Kunda is co-PI for the new Vanderbilt Center for Autism and Innovation, funded through a Vanderbilt Trans-Institutional Programs (TIPS) pilot award.

The website of the new center can be found at:

https://my.vanderbilt.edu/autismandinnovation/

See a writeup of AIVAS Lab contributions here:

Binula Illukpitiya wins second place in poster symposium

Binula placed second in the Vanderbilt summer research symposium for high school students, presenting a poster on his research on developing a new “Wearable Camera for Analysis of Human Visual Attention.”

Fernanda Eliott serves as panelist at STEM Think Tank and Conference

Fernanda Eliott served as a panelist for a panel on “Putting Computer Science to Use in the Industry and University Settings”, at the STEM Consortium’s 2017 STEM Think Tank and Conference in Nashville, TN.

Lab receives Vanderbilt Discovery Grant

With Maithilee Kunda as PI, the lab has received one of Vanderbilt’s Discovery Grants for 2017. The title of the project is “New Explorations in Visual Object Recognition.” This research has been led by lab member Xiaohan Wang. The full list of Discovery Grant projects can be found here:

https://www.vanderbilt.edu/provost/occi/projects-dg-2017.php

Maithilee Kunda speaks at Egocentric Vision workshop

Maithilee Kunda was an invited speaker at the Workshop on Egocentric Vision: From Science to Real-World Applications, held in Bloomington, Indiana. The title of her talk was “Looking and thinking: What wearable cameras can reveal about visual mental imagery.”

The full video of the talk can be seen here: https://youtu.be/JGNpGnbx6Is

Fernanda Eliott attends LATTICE symposium

Fernanda Eliott attended the inaugural LATTICE symposium in Seattle, WA, for “Launching Academics on the Tenure-Track: An Intentional Community in Engineering.”

Two papers accepted to CogSci 2017

We have had two papers accepted for poster presentation at this year’s CogSci conference, led by James Ainooson and Fernanda Eliott, respectively. The papers are:

- Ainooson, J., and Kunda, M. (2017). A computational model for reasoning about the Paper Folding task using visual mental images.

- Eliott, F. M., Stassun, K., and Kunda, M. (2017). Visual data exploration: How expert astronomers use flipbook-style visual approaches to understand new data.

Congrats to James and Fernanda!

Journal article published in Intelligence

Maithilee Kunda, together with colleagues Isabelle Soulières (University of Quebec at Montreal), Agata Rozga (Georgia Tech), and Ashok Goel (Georgia Tech), has published an article in the journal Intelligence titled “Error patterns on the Raven’s Standard Progressive Matrices Test.”

https://doi.org/10.1016/j.intell.2016.09.004

Below is the abstract of this paper:

Although many psychometric tests, like the Raven’s Progressive Matrices test, are commonly evaluated according to total score, additional variables can lend insight into the underlying cognitive processes of the test takers. We examine conceptual errors on the Raven’s Standard Progressive Matrices (SPM) test. We present a new, complete classification of error types on the SPM using a two-kind coding scheme. We also present a new method for analyzing group error patterns on these kinds of tests. We present two examples of this analysis using our SPM error classification. The first looks at the errors made by an artificial intelligence model of Raven’s problem solving. The second example looks at the errors made by children and adults who are typically developing or have been diagnosed with autism. We close by discussing implications of this error classification and analysis method for the interpretation of SPM scores, towards a better understanding of the diversity of cognitive processes involved in Raven’s problem solving.

Noel Warford presents poster at Oberlin College

Noel Warford presented a poster on his research on “A New Test of Visual and Verbal Thinking” at the Oberlin Celebration of Undergraduate Research.

Maithilee Kunda speaks at Emtech MIT

Maithilee Kunda gave a short talk about her research in AI and visual thinking at the MIT Tech Review’s Emtech conference. A video of the talk can be seen here:

http://events.technologyreview.com/video/watch/maithilee-kunda-vanderbilt-innovator/

Partnering with Auburn on NSF INCLUDES project

Maithilee Kunda is a co-PI on a collaborative NSF INCLUDES project, led by Overtoun Jenda at Auburn University, titled “South East Alliance for Persons with Disabilities in STEM (SEAPD-STEM).”

Maithilee Kunda named to MIT Tech Review’s list of 35 under 35

Maithilee Kunda was named to the MIT Tech Review’s annual list of 35 Innovators Under 35, in the category of “Visionary,” based on her research on visual thinking in AI that is inspired by studies of visual thinking in autism.

For more information, see:

Gradient descent in the real world, a.k.a. lab mini golf

A great end-of-summer lab outing to Grand Old Golf. We saw examples of setting the learning rate too high, one-shot learning, optimization through quantum tunneling, and even deep networks!

New Vanderbilt course on Computational Mental Imagery

Maithilee Kunda will be teaching a new graduate course at Vanderbilt this fall called “Computational Mental Imagery.” Catalog description: Computational basis of visual mental imagery in human cognition and in artificial intelligence (AI) systems. Topics include knowledge representations and operations in mental imagery, role of mental imagery in problem solving, creativity, education, and scientific discovery, and variations in mental imagery in cognitive conditions such as autism.

Mohamed El-Banani presents poster at CogSci

Mohamed El-Banani is presenting a poster at this year’s Cognitive Science conference in Philadelphia this week. The title of the research paper is “A Computational Exploration of Problem-Solving Strategies and Gaze Behaviors on the Block Design Task.” (The full paper can be found here.) Maithilee Kunda is also attending the conference.

Xiaohan Wang attends deep learning workshop

Xiaohan Wang is attending the 15th Neural Computation and Psychology Workshop in Philadelphia this week. The title of this year’s workshop is “Contemporary Neural Network Models: Machine Learning, Artificial Intelligence, and Cognition.”

Brandt Plomaritis presents poster at Vanderbilt Summer Symposium

Brandt Plomaritis is presenting a research poster at the Vanderbilt Student Research Summer Symposium. The title of the poster is “Random Walks as a Model of Exposure to Examples during Infant Learning.”

Maithilee Kunda and Julia Ting publish journal article in Advances in Cognitive Systems

A research paper, co-authored by Maithilee Kunda and Julia Ting, has just been published in the journal Advances in Cognitive Systems. The title of the article is “Looking Around the Mind’s Eye: Attention-Based Access to Visual Search Templates in Working Memory.”

Summer kickoff lunch at the SATCO

The first official lab meeting of the summer is taken outdoors to the much-loved SATCO (San Antonio Taco Company).

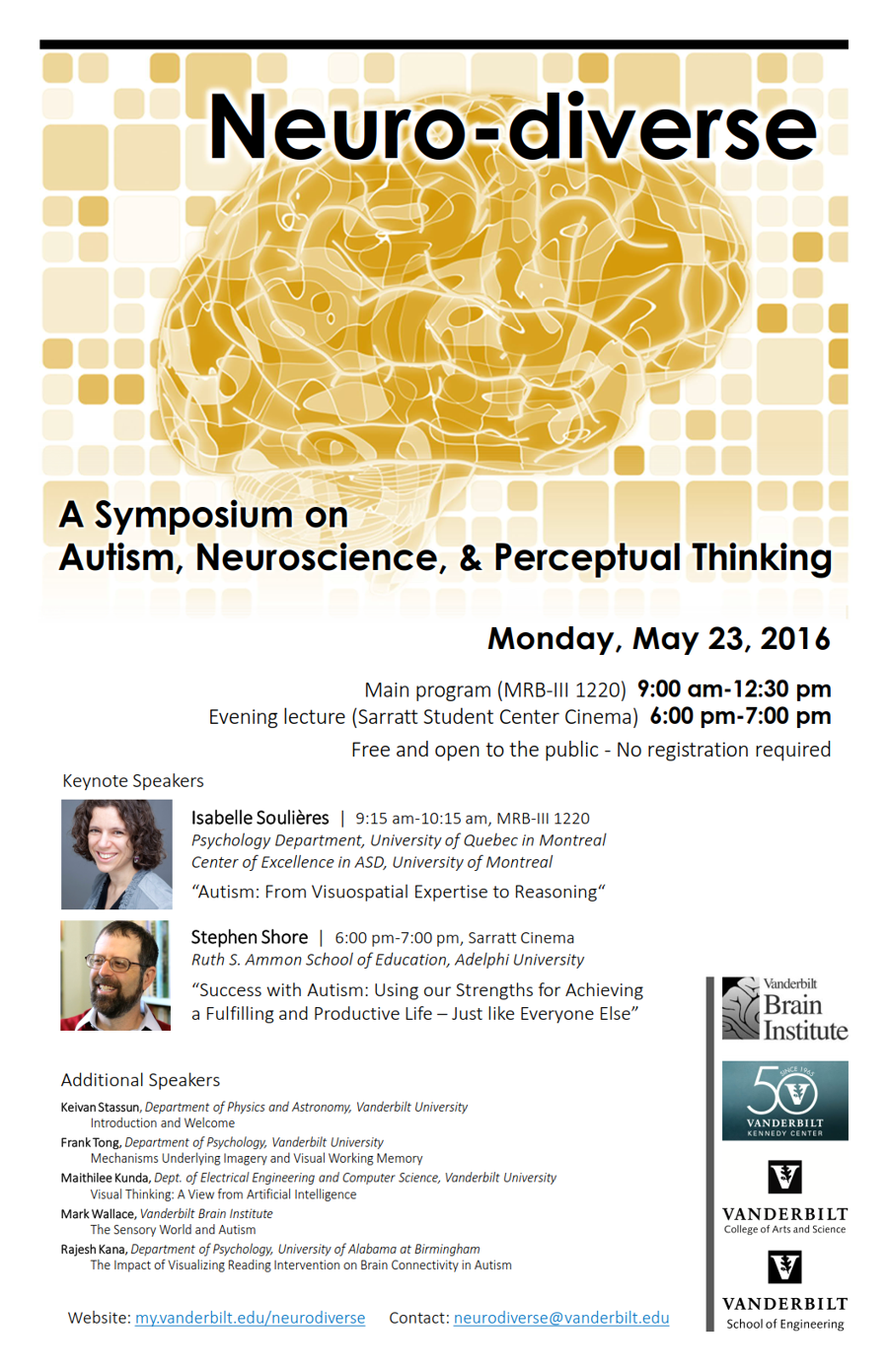

Maithilee Kunda organizes Neuro-Diverse Symposium

Maithilee Kunda is organizing an event called “Neuro-diverse: A Symposium on Autism, Neuroscience, and Perceptual Thinking.” The symposium will be held today on the Vanderbilt campus. More information, including invited speakers, program, time, and location, can be found here.

Maithilee Kunda speaks at Eye’s Mind Conference

Maithilee Kunda is at the University of East Anglia, Norwich, UK, this week to speak at a conference called The Eye’s Mind: Visual Imagination, Neuroscience and the Humanities. The title of her talk is “Visual imagination – A view from artificial intelligence.”

Paper accepted to CogSci 2016

Work by Maithilee Kunda, Mohamed El-Banani, and Jim Rehg has been accepted for poster presentation at CogSci 2016. The title of the paper is “A Computational Exploration of Problem-Solving Strategies and Gaze Behaviors on the Block Design Task.”

Maithilee Kunda joins Vanderbilt as assistant professor

Maithilee Kunda has joined Vanderbilt as a new assistant professor of computer science and computer engineering in the EECS Department.