ARMA Lab: Giving Surgeons a Sense of Touch

Posted by anderc8 on Tuesday, March 14, 2017 in News, TIPs 2015.

Written by Department of Mechanical Engineering Ph.D. students Long Wang and Rashid Yasin

This blog post is a follow-up to the previous article by Amy Wolf, “giving surgical robots a human touch”, which featured Dr. Nabil Simaan and his lab, Advanced Robotics and Mechanism Applications (ARMA). ARMA Lab, one of the labs under the Vanderbilt Institute for Surgery and Engineering (VISE), focuses on advanced robotics research with an emphasis on medical applications.

In her article, Amy mentioned an exciting direction in which Dr. Simaan is leading our team: making surgical robots more intelligent partners for surgeons. Achieving such functionality may spare surgeons from low-level, tedious tasks such as regulating safe interaction forces with the anatomy, allowing them to focus on more important tasks such as navigating tools to the proper locations to perform treatment (e.g., ablation therapy). It may help surgeons transition from “good to great” (see also the paper in Mechanical Engineering, 137(9)).

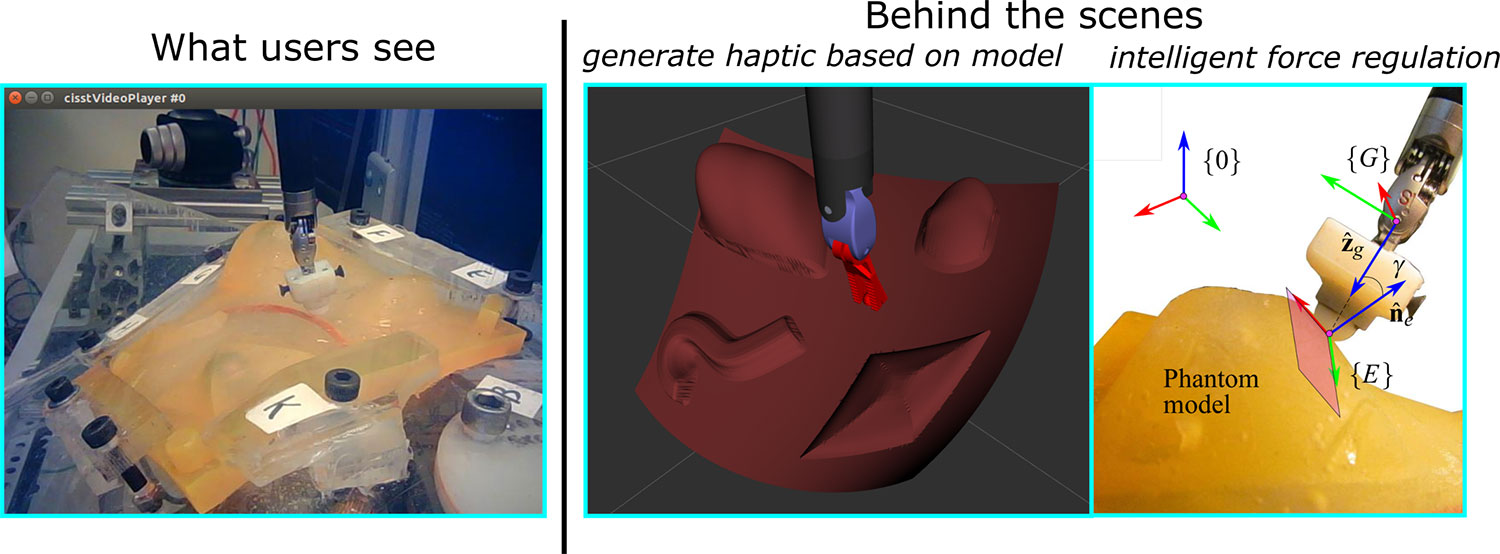

In one of our projects, we demonstrated the concept of intelligent robotic systems that assist surgeons by (i) regulating the interaction force with the anatomy and (ii) computing and rendering an assistive “virtual fixture” force on the surgeon’s hand that reflects the location of the anatomy on the remote side. The goal is to give surgeons the sense of touch that is often missing in robotic surgical procedures. This work is a collaboration between ARMA at Vanderbilt and LCSR at Johns Hopkins University, supervised by Dr. Nabil Simaan, Dr. Peter Kazanzides, and Dr. Russell Taylor.
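The virtual fixture force in (ii) can be pictured as a spring that pushes the surgeon’s hand back once the tool crosses the modeled anatomy surface. The following is a minimal Python sketch under stated assumptions, not the implementation used in this work: the anatomy is approximated locally by a plane (`PlaneModel` and `virtual_fixture_force` are hypothetical names), positions are in meters in one common frame, and the stiffness value is arbitrary.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class PlaneModel:
    """Hypothetical local model of the anatomy surface (assumption: locally planar)."""
    point: Vec3   # any point on the surface (m)
    normal: Vec3  # unit normal pointing out of the tissue


def virtual_fixture_force(tool_pos: Vec3, surface: PlaneModel, stiffness: float) -> Vec3:
    """Return a spring force F = k * d * n (N), where d is the penetration depth."""
    offset = tuple(p - q for p, q in zip(tool_pos, surface.point))
    signed_dist = dot(offset, surface.normal)
    if signed_dist >= 0.0:
        # Tool is outside the modeled tissue: render no force.
        return (0.0, 0.0, 0.0)
    depth = -signed_dist
    # Push the hand back out along the surface normal, proportional to depth.
    return tuple(stiffness * depth * n for n in surface.normal)


if __name__ == "__main__":
    tissue = PlaneModel(point=(0.0, 0.0, 0.0), normal=(0.0, 0.0, 1.0))
    # Tool 2 mm below the modeled surface, assumed stiffness of 200 N/m.
    print(virtual_fixture_force((0.01, 0.0, -0.002), tissue, stiffness=200.0))
```

Because the rendered force depends only on the tool position and the model, it stays smooth even when raw force readings are noisy.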

A typical workflow in an operating room with robot-assisted technology is shown in the figure above (source: 2015 Intuitive Surgical, Inc.). Surgeons interact with master consoles while robot manipulators on the patient’s side (remote side) replicate their motions to execute surgical tasks. We use a similar arrangement to develop and test our framework.
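The master-to-patient-side replication described above is often implemented incrementally: each change in the master position is scaled and added to the remote manipulator’s commanded position, and a clutch lets the user reposition the master without moving the remote tool. The Python sketch below illustrates that common teleoperation pattern under assumptions of our own; the class name, the 0.5 scale factor, and the clutch behavior are hypothetical and are not taken from the dVRK software.

```python
from dataclasses import dataclass
from typing import Optional

Vec3 = tuple[float, float, float]


@dataclass
class IncrementalTeleop:
    """Hypothetical master-to-remote position mapping with motion scaling and a clutch."""
    scale: float = 0.5                    # assumed: remote tool moves half as far as the master
    slave_pos: Vec3 = (0.0, 0.0, 0.0)     # commanded remote tool-tip position (m)
    last_master: Optional[Vec3] = None    # master position at the previous engaged update

    def update(self, master_pos: Vec3, engaged: bool) -> Vec3:
        """Apply the scaled master increment to the remote command while engaged."""
        if not engaged:
            # Clutch released: forget the master reference so re-engaging causes no jump.
            self.last_master = None
            return self.slave_pos
        if self.last_master is not None:
            delta = [m - l for m, l in zip(master_pos, self.last_master)]
            self.slave_pos = tuple(s + self.scale * d for s, d in zip(self.slave_pos, delta))
        self.last_master = master_pos
        return self.slave_pos


if __name__ == "__main__":
    teleop = IncrementalTeleop(scale=0.5)
    teleop.update((0.0, 0.0, 0.0), engaged=True)           # first engaged sample sets the reference
    print(teleop.update((0.02, 0.0, 0.0), engaged=True))   # master moves 2 cm, remote tool 1 cm
```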

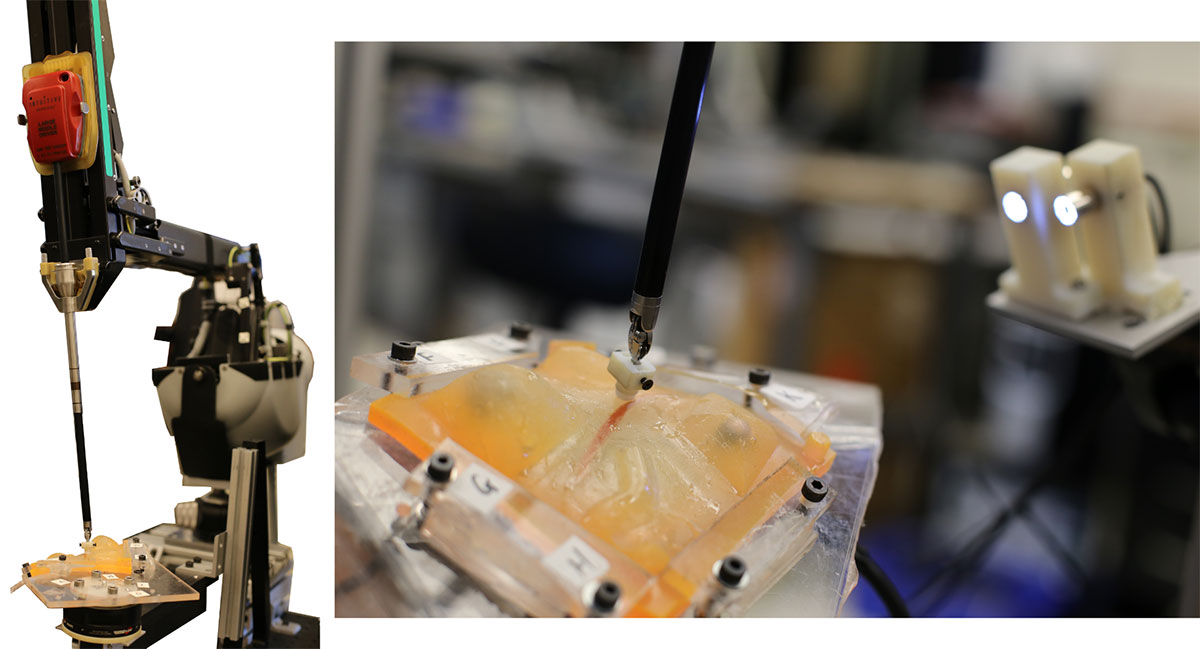

Patient Side

On the patient’s side (remote side), we use a da Vinci Research Kit (dVRK) Patient Side Manipulator (PSM) and a mock-up silicone phantom mounted on a force sensor. An inexpensive stereo camera system provides stereo views to the user in place of the Endoscopic Camera Manipulator (ECM) used in real surgery.

Because a force sensor could not be placed underneath an organ in a real surgical setting, this setup is not realistic. However, it gives us full information about the contact forces between the robot and the anatomy, which lets us answer more fundamental research questions. In the future, the external sensor could be replaced by the robot’s own force-sensing capabilities: many groups, including ours, are making promising efforts to add force sensing to surgical robots.
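Because the force sensor reports the full contact force, it can also tell the system where contact happens. One simple way to exploit this, sketched here as an assumption rather than the method used in our work, is to record the tool-tip position whenever the force magnitude exceeds a threshold and fit a local plane to those contact points. The function names, the threshold, and the plane model are hypothetical, and the sample data are synthetic. Real tissue deforms under the tool, so such points would sit slightly inside the undeformed surface.

```python
import math
from typing import Iterable

Vec3 = tuple[float, float, float]


def collect_contacts(samples: Iterable[tuple[Vec3, Vec3]], force_threshold: float) -> list[Vec3]:
    """Keep tool-tip positions (m) recorded while the contact force magnitude (N) exceeds a threshold."""
    return [pos for pos, force in samples if math.sqrt(sum(f * f for f in force)) > force_threshold]


def fit_plane(points: list[Vec3]) -> tuple[float, float, float]:
    """Least-squares fit of z = a*x + b*y + c to contact points; returns (a, b, c)."""
    n = len(points)
    if n < 3:
        raise ValueError("need at least three contact points")
    xm = sum(p[0] for p in points) / n
    ym = sum(p[1] for p in points) / n
    zm = sum(p[2] for p in points) / n
    # Centered sums for the 2x2 normal equations in the slopes (a, b).
    sxx = sum((p[0] - xm) ** 2 for p in points)
    syy = sum((p[1] - ym) ** 2 for p in points)
    sxy = sum((p[0] - xm) * (p[1] - ym) for p in points)
    sxz = sum((p[0] - xm) * (p[2] - zm) for p in points)
    syz = sum((p[1] - ym) * (p[2] - zm) for p in points)
    det = sxx * syy - sxy * sxy
    if det <= 1e-9 * sxx * syy:
        raise ValueError("contact points are degenerate (e.g. collinear)")
    a = (sxz * syy - syz * sxy) / det
    b = (syz * sxx - sxz * sxy) / det
    c = zm - a * xm - b * ym  # the fitted plane passes through the centroid
    return a, b, c


def plane_normal(a: float, b: float) -> Vec3:
    """Unit normal, pointing toward +z, of the plane z = a*x + b*y + c."""
    norm = math.sqrt(a * a + b * b + 1.0)
    return (-a / norm, -b / norm, 1.0 / norm)


if __name__ == "__main__":
    # Synthetic (tool position, measured force) samples; the first is free motion.
    samples = [((0.00, 0.00, 0.010), (0.0, 0.0, 0.0)),
               ((0.00, 0.00, 0.000), (0.0, 0.0, 0.5)),
               ((0.01, 0.00, 0.001), (0.0, 0.0, 0.6)),
               ((0.00, 0.01, 0.000), (0.0, 0.0, 0.4))]
    contacts = collect_contacts(samples, force_threshold=0.2)
    a, b, c = fit_plane(contacts)
    print(a, b, c, plane_normal(a, b))
```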

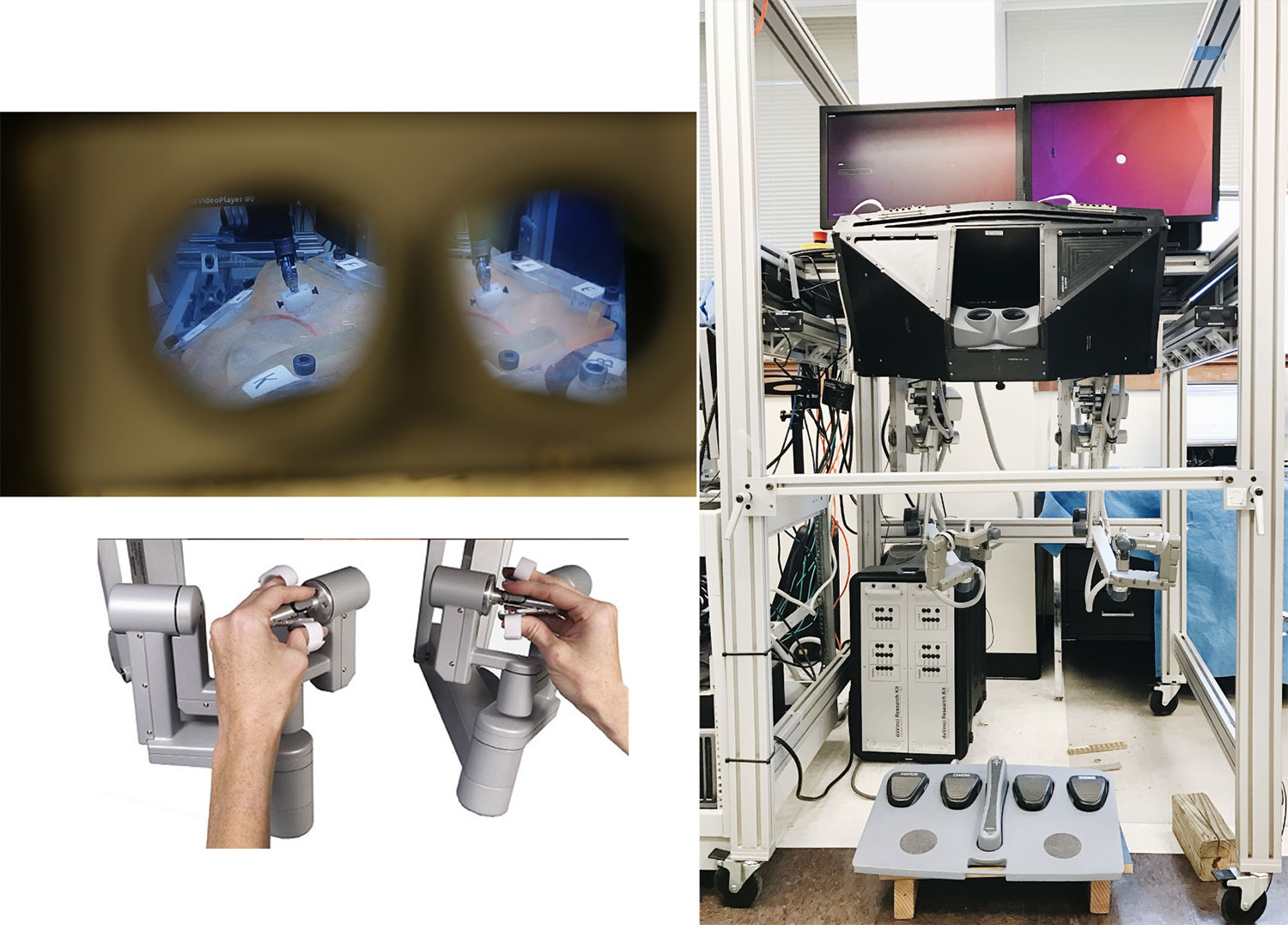

Surgeon (user) Console

For the surgeon (user) console side, we use a da Vinci Research Kit (dVRK) Master Tool Manipulator (MTM), including two master arms (only one is used for this demo) and a stereo viewer system that projects the two video feeds from the remote side. A standard foot pedal system is used for control.

A sense of touch & intelligent robot assistance

Surgeons (users) in this demo can see “inside” the anatomy through the stereo video feeds from the remote side, but giving them a sense of touch requires a more sophisticated approach. Simply sending the force-sensor readings to the user would be too noisy and would add lag between the user’s movements and the force feedback. To address these problems, a model representing the environment is updated behind the scenes, and that model is used to generate haptic rendering forces based on a simple spring force model. Additionally, without any input from the user, the patient-side manipulator regulates its interaction force with the environment using its own hybrid force/motion controller.
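The hybrid force/motion idea can be illustrated with a common textbook pattern. The Python sketch below is a velocity-level hybrid scheme, assumed for illustration and not the controller used in this work: motion commands act only in the plane tangent to the tissue, while along the surface normal the velocity is driven by the error between a desired and a measured contact force. `hybrid_velocity`, `simulate_contact`, the gains, and the linear-spring tissue are all hypothetical.

```python
Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def hybrid_velocity(v_cmd: Vec3, force_meas: Vec3, normal: Vec3,
                    f_desired: float, k_force: float) -> Vec3:
    """Velocity command: motion control in the tangent plane, force control along the normal.

    normal: unit surface normal pointing out of the tissue.
    force_meas: contact force on the tool (N); the tissue pushes the tool along +normal.
    k_force: assumed force-error gain (m/s per N).
    """
    # Motion-controlled subspace: remove the normal component of the commanded velocity.
    vn = dot(v_cmd, normal)
    v_tangent = tuple(v - vn * n for v, n in zip(v_cmd, normal))
    # Force-controlled subspace: if pressing too lightly (f_n < f_desired), move along -normal.
    f_n = dot(force_meas, normal)
    speed_n = -k_force * (f_desired - f_n)
    return tuple(vt + speed_n * n for vt, n in zip(v_tangent, normal))


def simulate_contact(steps: int = 2000, dt: float = 0.001,
                     tissue_stiffness: float = 500.0, f_desired: float = 1.0) -> float:
    """Toy test: tissue is a linear spring below z = 0; returns the last measured contact force."""
    pos = [0.0, 0.0, 0.0]
    normal = (0.0, 0.0, 1.0)
    f = 0.0
    for _ in range(steps):
        f = tissue_stiffness * max(0.0, -pos[2])     # spring reaction along +z
        v = hybrid_velocity((0.005, 0.0, 0.0), (0.0, 0.0, f), normal, f_desired, k_force=0.01)
        pos = [p + vi * dt for p, vi in zip(pos, v)]  # assume ideal velocity tracking
    return f


if __name__ == "__main__":
    print(f"contact force after 2 s: {simulate_contact():.3f} N")
```

Splitting the directions this way keeps the two objectives from fighting each other: the commanded tangential motion passes through unchanged, while the normal direction settles where the spring force equals `f_desired`.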

Demo at the VISE Symposium

We believe the best way to improve a system is to have different surgeons and users try it and give us feedback. The 5th Annual Surgery, Intervention, and Engineering Symposium sponsored by VISE was a great opportunity to do just that. We received a great deal of positive feedback and also identified areas where the system can be improved as part of our ongoing research.

Lab tour & demo for Harpeth Hall Winterim 2017

One very important mission of our research is to connect with and inspire younger generations. As the culmination of a three-week “Winterim” teaching program on medical robotics at Harpeth Hall high school, lab members showed a group of 10 students around the ARMA lab. The tour included this demo along with other examples of our current work. These 9th and 10th graders were able to feel how the robot reacts to their commands and gain a hands-on understanding of what robots look like and how they are developed.

Approximately half of the class was considering a career in biomedical engineering or medicine. While we do not expect them to understand all the engineering details behind these demos, we hope that by the time some of these students become doctors, the features we are building today will be commonly found in surgical suites across the globe.

This work has been accepted by the Journal of Mechanisms and Robotics and will appear in an upcoming issue. The accepted manuscript can be found here.