Quality Matters – Research Design, Magnitude and Effect Sizes

The keystone of evidence-based practices (EBPs) is research. Through research, we are able to create a solid foundation on which an EBP can stand. However, not all research is created equal. There are studies that are the cornerstone for other researchers, studies of such high quality that they can be cited and referenced with no qualms. Then there is research that is high-quality but may not meet all of the criteria for an EBP. Then there are studies that are missing key elements of a quality study; while they do show effects, those effects may be affected by reliability, validity, or implementation problems. Finally, there are studies that are not high-quality. These studies leave out many of the essential elements of a high-quality study, and therefore the results cannot be validated.

The Council for Exceptional Children (CEC) has developed quality indicators to help identify studies that examine interventions and their effects on children with disabilities. These indicators examine whether a study is methodologically sound, which is another way of describing the quality of the research.

The Council for Exceptional Children’s Research Quality Indicators are available in the CEC (2014) standards document listed in the references.

Here is a simplified chart of the CEC quality indicators. We will revisit these indicators later to see what is required of studies to impact the status of evidence-based practices.

CEC Quality Indicators

(Adapted from the Council for Exceptional Children Standards for Evidence-Based Practices in Special Education, 2014)

| Indicator | Description | Additional Information |
| --- | --- | --- |
| 1.0 – Context and Setting | The study provides sufficient information regarding the critical features of the context or setting. | School information, curriculum, geographical location, socioeconomic status, physical layout |
| 2.0 – Participants | The study provides sufficient information to identify the population of participants to which results may be generalized and to determine or confirm whether the participants demonstrated the disability or difficulty of focus. | Gender, age, race/ethnicity, socioeconomic status, language status, etc.; disability status and how determination for disability status was obtained |
| 3.0 – Intervention Agent | The study provides sufficient information regarding the critical features of the intervention agent. | Who is providing the intervention (teacher, researcher, etc.) and information about the provider (age, educational background, etc.); how much training was provided to those delivering the intervention and what qualifications are needed to implement the intervention |
| 4.0 – Description of Practice | The study provides sufficient information regarding the critical features of the practice (intervention), such that the practice is clearly understood and can be reasonably replicated. | Describes intervention procedures, materials, dosage, prompts, proximity, etc. |
| 5.0 – Implementation Fidelity | The practice is implemented with fidelity. | Is there information about implementation fidelity (checklist, etc.); how often was fidelity looked at and how was it reported; was fidelity checked throughout the entire study, interventionist, participant, etc. |
| 6.0 – Internal Validity | The independent variable is under the control of the experimenter. The study describes the services provided in control and comparison conditions and phases. The research design provides sufficient evidence that the independent variable causes change in the dependent variable or variables. Participants stayed with the study, so attrition is not a significant threat to internal validity. | Independent variable is manipulated with descriptions of baseline, control groups, interventions (duration, length, etc.); describes if there was randomization and, if no randomization was done, why and within what limits; how many experimental effects were reported; single subject: how many baseline points and descriptions of performance; threats to internal validity and how these apply to single-subject designs; was there attrition (did they lose any participants from the beginning to the end of the study) and is attrition controlled for |
| 7.0 – Outcome Measures/Dependent Variables | Outcome measures are applied appropriately to gauge the effect of the practice on study outcomes. Outcome measures demonstrate adequate psychometrics. | Outcomes are socially important; descriptions of measurement of dependent variable; reporting of p values and effect sizes; frequency and outcome of measures (for single subject, three points per phase change); there is reliability information among observers; provides information about validity (content, social, criterion, etc.) |
| 8.0 – Data Analysis | Data analysis is conducted appropriately. The study reports information on effect size. | Statistical techniques for comparison are reported and more than one effect size statistic is reported |

(Please note that all graphs and infographics are original.)

Experimental Designs

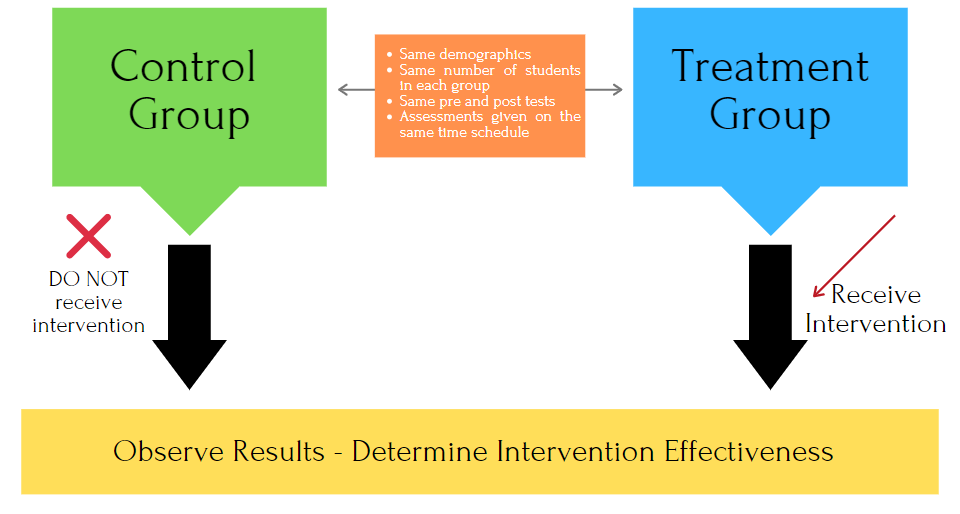

One of the key quality features of EBP research is that studies employ an experimental design. An experimental design compares results from two groups to determine whether an intervention was effective. Participants are randomly assigned either to the treatment group (getting the intervention) or to the control group (business as usual). Business as usual means students receive their normal classroom instruction with no change applied. All of the participants share the same key features; for example, all are in the 2nd grade and have been identified as having a math disability. The participants are given the same pre-test and post-test assessments on the same schedule. Because one group received the treatment and one did not, differences in outcomes between the groups can be attributed to the intervention with confidence. Experimental designs are also quantitative, which means the data can be measured and interpreted through statistical analyses.
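As a rough illustration of the treatment/control comparison described above, here is a minimal Python sketch. It assumes students are assigned to groups at random, and every name and number in it (`assign_groups`, `mean_gain`, the student IDs, the scores, the 5-point and 2-point gains) is invented for the example rather than taken from any study.

```python
import random
from statistics import mean

def assign_groups(student_ids, seed=0):
    """Randomly split students into two groups of (near) equal size."""
    rng = random.Random(seed)  # fixed seed so the example is reproducible
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

def mean_gain(pre, post, group):
    """Average (post-test minus pre-test) score for the students in a group."""
    return mean(post[s] - pre[s] for s in group)

# Hypothetical pre-test scores for eight students (invented for illustration).
pre = {"s1": 10, "s2": 12, "s3": 9, "s4": 11, "s5": 10, "s6": 13, "s7": 8, "s8": 12}
treatment, control = assign_groups(pre)

# Invented post-test scores: assume the treatment group gains 5 points and the
# control group (business as usual) gains 2. Real scores would be measured.
post = {s: pre[s] + (5 if s in treatment else 2) for s in pre}

difference = mean_gain(pre, post, treatment) - mean_gain(pre, post, control)
print(f"Treatment gained {difference} more points on average than control")
```

Because both groups take the same pre-test and post-test, the difference in average gain is the quantity a real study would then test statistically.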

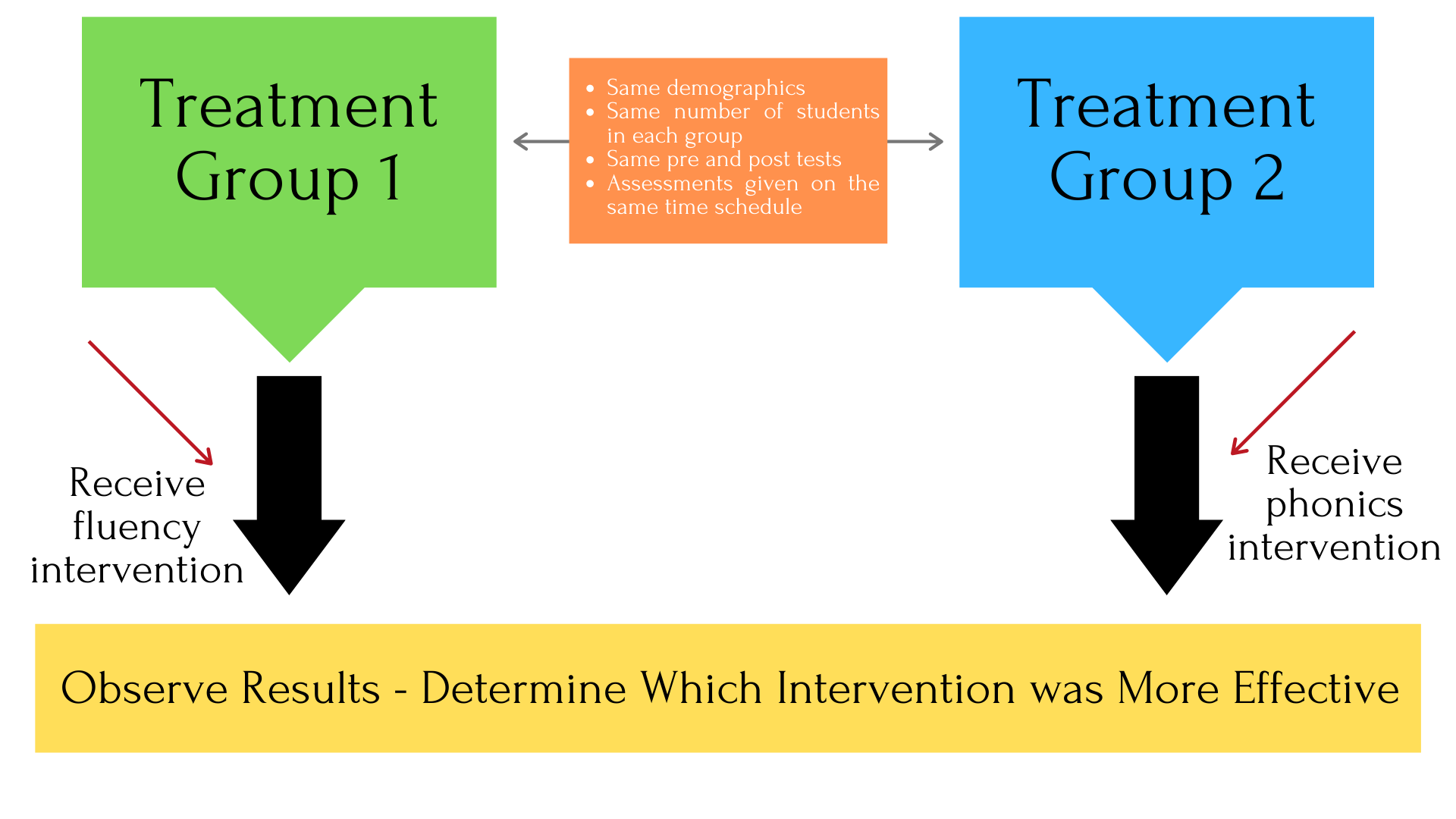

Quasi-Experimental Designs

Some studies are quasi-experimental. These studies do not randomly assign participants to groups. Participants still take the same pre- and post-assessments, but in the studies described here there is no “business as usual” group. Instead, these studies look at which of two interventions had more impact on student outcomes. For example, two groups of 3rd graders may receive two different reading interventions, one focused on phonics instruction only and the other on fluency only. Each group receives its intervention over the same number of weeks, and post-tests are given at the end of treatment. Based on the results, researchers can determine which intervention was more impactful.

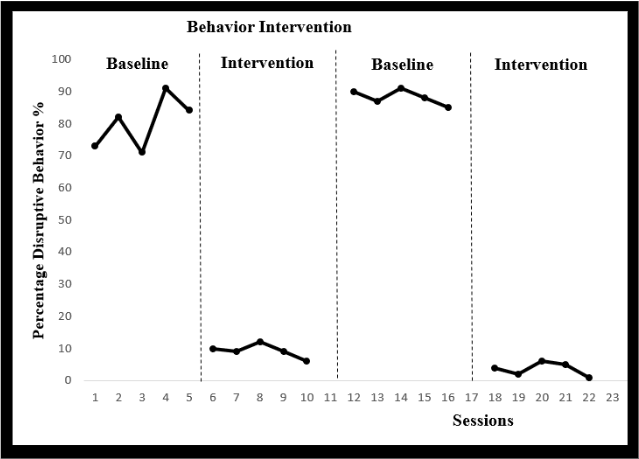

Single-Subject Designs

Single-subject designs are just what the name suggests: they examine the effects of an intervention on a single subject, although the intervention's effects may be examined across different behaviors or skills. The same intervention can also be studied across participants, which looks at the intervention's effects on participants of the same age, level, or skill set. A single-subject design can also compare alternating treatments and their effects on a participant. All of these single-subject designs demonstrate an intervention's effects. Note that single-case design data look most like the data that teachers collect in their own classrooms.

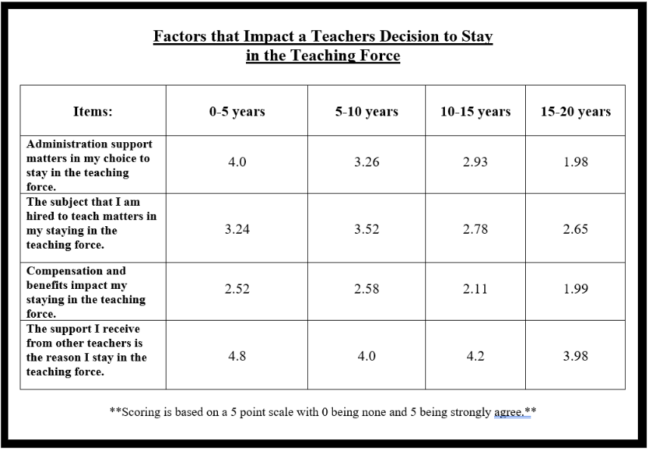

Qualitative Designs

Qualitative designs differ from quantitative designs in that the data are not numerical measurements. Qualitative studies involve things like interviews, surveys, and anecdotal information. These studies look at things like teachers’ perceptions of the learning environment, survey information about why a teacher left the classroom, or descriptive information such as the qualities that effective teachers share. While the information from qualitative studies can be used in determining an evidence-based practice, it cannot be the only type of research in the body of evidence. This is because these studies provide no measurable data for comparing an intervention and its effects on students or teachers.

What is magnitude and what are effect sizes?

Magnitude refers to the number of studies that show a strong, positive cause-and-effect relationship between an intervention and improved academic or behavioral outcomes. Basically, the more studies you have that meet the quality indicators, the larger the magnitude of the evidence for the intervention. An intervention with 13 quality studies has a greater magnitude for effectiveness than an intervention with 3 quality studies.

Magnitude is also applied to effect sizes. Effect sizes report statistical information about how much impact an intervention had on student performance. For example, an effect size of 0.15 indicates that the intervention had some effect, but a small one. An effect size of 1.5 indicates a much larger effect on student outcomes. As a rule of thumb for standardized mean differences, values around 0.2 are commonly treated as small, 0.5 as medium, and 0.8 or more as large. An effect size near zero means the intervention made little or no difference, and a negative effect size means the comparison group did better than the intervention group. Keep in mind that an effect size describes how large an effect is, which is a separate question from whether the effect is statistically significant. The larger the effect size, the greater the magnitude of the intervention's impact.
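To make the idea of an effect size concrete, here is a minimal sketch of one common effect size statistic, Cohen's d (the difference between two group means divided by their pooled standard deviation). The text above does not say which statistic it has in mind, so the choice of Cohen's d is an assumption, and the scores are made up for illustration.

```python
from math import sqrt
from statistics import mean, stdev

def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd

# Made-up post-test scores (not real data).
treatment_scores = [14, 16, 15, 18, 17]
control_scores = [13, 15, 14, 17, 16]

d = cohens_d(treatment_scores, control_scores)
print(f"Cohen's d = {d:.2f}")  # about 0.63 for these invented scores
```

A positive value means the treatment group scored higher on average, and swapping the two arguments flips the sign.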

So what do these research designs have to do with a practice being evidence-based? Based on the rigor and quality of each of the design types, there is a minimum number of quality studies needed to identify a practice or intervention as evidence-based.

Here are the general guidelines that are used in special education:

General Guidelines for Evidence-Based Practices in Special Education

(Adapted from Torres, Farley, and Cook, 2012)

| Design Type | Guidelines |
| --- | --- |
| Group design and quasi-experimental | At least two high-quality or four acceptable-quality studies must support the practice as effective, with a weighted effect size across studies significantly greater than zero. High-quality studies must meet criteria in at least three of the following areas: description of participants, intervention and comparison condition, outcome measures, and data analysis; they must also meet at least four of eight “desirable” indicators, such as attrition rate (Gersten et al., 2005). |
| Single subject | Five or more high-quality studies with 20 or more participants that meet criteria related to participants and setting; independent and dependent variables; baseline; and internal, external, and social validity must support the practice as effective (Horner et al., 2005). |
| Qualitative | Although qualitative studies are useful for many purposes, they are not designed to determine whether a practice causes improved student outcomes (McDuffie & Scruggs, 2008). |
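The study-count thresholds in these guidelines can be written as a simple check. This is only a sketch: the function names and parameters are hypothetical, and it assumes someone has already judged each study's quality and determined whether the weighted effect size is significantly greater than zero, which are the hard parts.

```python
def group_design_supported(high_quality, acceptable_quality, effect_significantly_positive):
    """Group and quasi-experimental guideline: at least two high-quality or
    four acceptable-quality supporting studies, plus a weighted effect size
    significantly greater than zero (passed in as a yes/no judgment)."""
    enough_studies = high_quality >= 2 or acceptable_quality >= 4
    return enough_studies and effect_significantly_positive

def single_subject_supported(high_quality, total_participants):
    """Single-subject guideline: five or more high-quality supporting studies
    with 20 or more participants in total."""
    return high_quality >= 5 and total_participants >= 20

# Hypothetical bodies of evidence (counts invented for illustration).
print(group_design_supported(2, 0, True))   # True
print(group_design_supported(1, 3, True))   # False: too few studies either way
print(single_subject_supported(5, 18))      # False: fewer than 20 participants
```

Qualitative studies are left out on purpose, since the guidelines say they are not designed to establish whether a practice causes improved outcomes.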

Now that we have looked at design types, magnitude and effect sizes, let’s look at some resources for selecting Evidence-Based Practices.

References:

Council for Exceptional Children (CEC). (2014). Council for Exceptional Children standards for evidence-based practices in special education. Retrieved from http://www.cec.sped.org/~/media/Files/Standards/Evidence%20based%20Practices%20and%20Practice/CECs%20EBP%20Standards.pdf

Gersten, R., Fuchs, L. S., Compton, D., Coyne, M., Greenwood, C., & Innocenti, M. S. (2005). Quality indicators for group experimental and quasi-experimental research in special education. Exceptional Children, 71, 149–164.

Horner, R. H., Carr, E. G., Halle, J., McGee, G., Odom, S., & Wolery, M. (2005). The use of single-subject research to identify evidence-based practice in special education. Exceptional Children, 71, 165–179.

Kratochwill, T. R., Hitchcock, J. H., Horner, R. H., Levin, J. R., Odom, S. L., Rindskopf, D. M., & Shadish, W. R. (2013). Single-case intervention research design standards. Remedial and Special Education, 34, 26–38.

Torres, C., Farley, C. A., & Cook, B. G. (2012). A special educator’s guide to successfully implementing evidence-based practices. TEACHING Exceptional Children, 45(1), 64–73.