Simultaneous Truth and Performance Level Estimation with Incomplete, Over-complete, and Ancillary Data

A. Landman, H. Wan, J. Bogovic, and J. L. Prince. “Simultaneous Truth and Performance Level Estimation with Incomplete, Over-complete, and Ancillary Data,” in Proceedings of the SPIE Medical Imaging Conference, San Diego, CA, February 2010 (Oral Presentation). PMC2917119

Full Text: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2917119/

Abstract

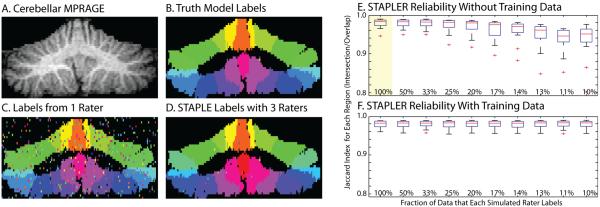

Image labeling and parcellation are critical tasks for assessing volumetric and morphometric features in medical imaging data. The labeling process is inherently error prone because images are corrupted by noise and artifacts. Even expert interpretations are limited by the subjectivity and precision of the individual raters. Hence, all labels must be considered imperfect, with some degree of inherent variability. One may seek multiple independent assessments both to reduce this variability and to quantify the degree of uncertainty. Existing techniques exploit maximum a posteriori statistics to combine data from multiple raters. A current limitation of these approaches is that they require each rater to generate a complete dataset, which is often impossible given both human foibles and the typical turnover rate of raters in a research or clinical environment. Herein, we propose a robust set of extensions that allow for missing data, account for repeated label sets, and utilize training/catch trial data. With these extensions, numerous raters can label small, overlapping portions of a large dataset, and rater heterogeneity can be robustly controlled while simultaneously estimating a single, reliable label set and characterizing uncertainty. The proposed approach enables parallel processing of labeling tasks and reduces the otherwise detrimental impact of rater unavailability.
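
To make the missing-data idea concrete, the following is a minimal sketch of a binary, STAPLE-style expectation-maximization loop in which each rater may leave voxels unlabeled. It is an illustration under simplifying assumptions, not the authors' implementation: it handles only two labels, uses a fixed global prior, and omits repeated label sets and training/catch trial data. The function name `staple_binary`, its parameters, and the toy data are all hypothetical.

```python
def staple_binary(labels, prior=0.5, iters=50, init=0.9):
    """Simplified binary STAPLE-style EM that tolerates missing labels.

    labels[j][i] is rater j's label (0 or 1) for voxel i, or None if
    rater j did not label that voxel. Returns (w, sens, spec), where
    w[i] is the estimated probability that voxel i is truly 1.
    """
    n_raters = len(labels)
    n_voxels = len(labels[0])
    sens = [init] * n_raters  # per-rater sensitivity estimates
    spec = [init] * n_raters  # per-rater specificity estimates
    w = [prior] * n_voxels

    for _ in range(iters):
        # E-step: posterior that each voxel is 1, using only the raters
        # who actually labeled that voxel.
        for i in range(n_voxels):
            a, b = prior, 1.0 - prior
            for j in range(n_raters):
                d = labels[j][i]
                if d is None:
                    continue  # missing observation contributes nothing
                a *= sens[j] if d == 1 else 1.0 - sens[j]
                b *= spec[j] if d == 0 else 1.0 - spec[j]
            w[i] = a / (a + b) if a + b > 0 else prior

        # M-step: update each rater's performance over the voxels
        # that rater observed.
        for j in range(n_raters):
            seen = [i for i in range(n_voxels) if labels[j][i] is not None]
            pos = sum(w[i] for i in seen)
            neg = sum(1.0 - w[i] for i in seen)
            if pos > 0:
                sens[j] = sum(w[i] for i in seen if labels[j][i] == 1) / pos
            if neg > 0:
                spec[j] = sum(1.0 - w[i] for i in seen if labels[j][i] == 0) / neg
    return w, sens, spec


# Toy data (made up for illustration): four raters, six voxels.
# The first three raters each skip some voxels; the fourth labels
# everything but disagrees with the others on voxel 1.
toy_labels = [
    [1, 1, 1, 0, 0, None],
    [1, 1, None, 0, 0, 0],
    [None, 1, 1, 0, None, 0],
    [1, 0, 1, 0, 0, 0],
]
w, sens, spec = staple_binary(toy_labels)
```

In this sketch, a missing label is simply skipped in both steps, so a rater is scored only on the voxels that rater saw. That is one straightforward way to let many raters cover small, overlapping portions of a dataset.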