Distributed Deep Learning Across Multisite Datasets for Generalized CT Hemorrhage Segmentation

Remedios, S. W., Roy, S., Bermudez, C., Patel, M. B., Butman, J. A., Landman, B. A., & Pham, D. L. (2019). Distributed Deep Learning Across Multi‐site Datasets for Generalized CT Hemorrhage Segmentation. Medical Physics.

Full Text: PubMed Link

Abstract

Purpose: As deep neural networks achieve more success in the wide field of computer vision, greater emphasis is being placed on how well these models generalize when deployed in production. With sufficiently large training datasets, models can typically avoid overfitting; however, in medical imaging it is often difficult to obtain enough data from a single site. Sharing data between institutions is also frequently nonviable or prohibited because of security measures and research compliance constraints, which are enforced to guard protected health information (PHI) and patient anonymity.

Methods: In this paper, we implement cyclic weight transfer with independent datasets from multiple geographically disparate sites without compromising PHI. We compare results between single-site learning (SSL) and multisite learning (MSL) models on testing data drawn from each of the training sites as well as two other institutions.
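As a rough illustration of the idea behind cyclic weight transfer, the sketch below trains a model at one site, passes only its weights to the next site, continues training there, and repeats the cycle. It is a minimal toy example under stated assumptions, not the paper's implementation: a logistic regression in NumPy stands in for the segmentation network, the two sites hold synthetic data, and every name here (`local_update`, `cyclic_weight_transfer`, `make_site`) is hypothetical.

```python
import numpy as np

def local_update(weights, X, y, lr=0.1, epochs=1):
    """Run gradient descent for logistic regression on ONE site's data."""
    w = weights.copy()
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))   # predicted probabilities
        grad = X.T @ (p - y) / len(y)        # mean log-loss gradient
        w -= lr * grad
    return w

def cyclic_weight_transfer(sites, n_features, cycles=50, lr=0.1, epochs_per_visit=1):
    """Visit each site in turn; only the weight vector leaves a site."""
    w = np.zeros(n_features)
    for _ in range(cycles):
        for X, y in sites:                   # raw data never moves between sites
            w = local_update(w, X, y, lr, epochs_per_visit)
    return w

# Synthetic stand-ins for two sites with shifted feature distributions (assumption).
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])

def make_site(shift, n=200):
    X = rng.normal(loc=shift, size=(n, 2))
    y = (X @ true_w > 0).astype(float)
    return X, y

site_a = make_site(-0.5)
site_b = make_site(0.5)
w = cyclic_weight_transfer([site_a, site_b], n_features=2)

# Evaluation pools the data only for this toy demonstration.
X_all = np.vstack([site_a[0], site_b[0]])
y_all = np.concatenate([site_a[1], site_b[1]])
acc = np.mean(((X_all @ w) > 0) == (y_all > 0.5))
print(f"pooled accuracy: {acc:.3f}")
```

In a real deployment the inner loop would run at each institution, and only the serialized weights would be sent over the network.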

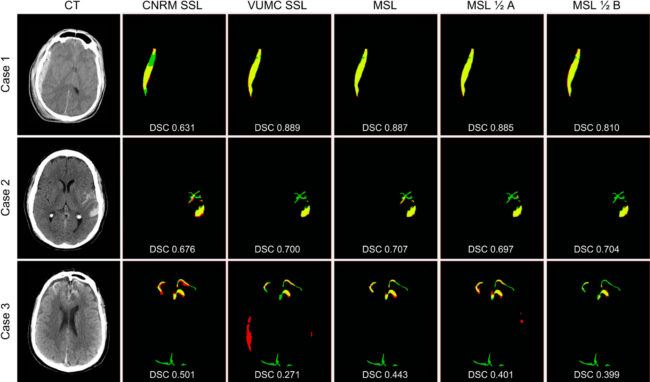

Results: The MSL model attains an average Dice similarity coefficient (DSC) of 0.690 on the holdout institution datasets, with a volume correlation of 0.914. These correspond to statistically significant relative improvements of 7% and 5%, respectively, over the average of the two SSL models, which attained an average DSC of 0.646 and an average correlation of 0.871.
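For readers unfamiliar with the metric, the following sketch computes the DSC between two binary masks and reproduces the relative improvements quoted above from the reported averages. The helper names and the tiny example masks are illustrative assumptions, and treating two empty masks as a perfect match is a convention chosen here, not something the paper specifies.

```python
import numpy as np

def dice(pred, truth):
    """Dice similarity coefficient: 2|A ∩ B| / (|A| + |B|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0  # assumption: two empty masks count as perfect agreement
    return 2.0 * np.logical_and(pred, truth).sum() / denom

def relative_improvement(new, old):
    """Fractional gain of `new` over `old`."""
    return (new - old) / old

# Toy masks: 4 manual voxels, 6 automatic voxels, 4 shared.
truth = np.zeros((4, 4), dtype=bool)
truth[1:3, 1:3] = True
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:4] = True

print(dice(pred, truth))                    # 2*4 / (6+4) = 0.8
print(relative_improvement(0.690, 0.646))   # about 0.068, i.e. roughly 7%
print(relative_improvement(0.914, 0.871))   # about 0.049, i.e. roughly 5%
```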

Conclusions: We show that a neural network can be efficiently trained on data from two physically remote sites without consolidating patient data to a single location. The resulting network improves model generalization and achieves higher average DSCs on external datasets than neural networks trained on data from a single source.

Results of automatic segmentations compared with the manual gold standard for each training method, shown for three cases representing a range of DSCs: top quartile, Case 1 (VUMC dataset); median, Case 2 (CNRM dataset); and bottom quartile, Case 3 (VCU dataset). For each case, voxels in the manual segmentation only (false negatives, FN) are green, voxels in the automatic segmentation only (false positives, FP) are red, and voxels where the manual and automatic segmentations overlap (true positives, TP) are yellow. Black is true negative (TN). Corresponding DSCs are overlaid. Because we aim to show that MSL generalizes across different institutions on average, we encourage consideration of the DSCs as a whole rather than the individual cases.
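The color coding in this caption can be sketched as a simple mapping from a pair of binary masks to an RGB image. This is a hypothetical helper for illustration only, assuming NumPy arrays of matching shape; it is not the code used to render the figure.

```python
import numpy as np

def overlay(manual, auto):
    """Color-code agreement between manual and automatic binary masks."""
    manual = np.asarray(manual, dtype=bool)
    auto = np.asarray(auto, dtype=bool)
    rgb = np.zeros(manual.shape + (3,), dtype=np.uint8)  # TN stays black
    rgb[manual & ~auto] = (0, 255, 0)     # FN: manual only, green
    rgb[~manual & auto] = (255, 0, 0)     # FP: automatic only, red
    rgb[manual & auto] = (255, 255, 0)    # TP: overlap, yellow
    return rgb

# Tiny 2x2 example covering all four outcomes.
manual = [[1, 1], [0, 0]]
auto = [[1, 0], [1, 0]]
image = overlay(manual, auto)
print(image.shape)
```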