Distortion correction of diffusion weighted MRI without reverse phase-encoding scans or field-maps

Kurt G Schilling*, Justin Blaber*, Colin Hansen, Leon Cai, Baxter Rogers, Adam W Anderson, Seth A Smith, Praitayini Kanakaraj, Tonia Rex, Susan M. Resnick, Andrea T. Shafer, Laurie Cutting, Neil Woodward, David Zald, Bennett A Landman. “Distortion correction of diffusion weighted MRI without reverse phase-encoding scans or field-maps”. PLoS ONE 15(7): e0236418. https://doi.org/10.1371/journal.pone.0236418

Full text: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0236418

Abstract

Diffusion magnetic resonance images may suffer from geometric distortions due to susceptibility-induced off-resonance fields, which cause geometric mismatch with anatomical images and ultimately affect subsequent quantification of microstructural or connectivity indices. State-of-the-art diffusion distortion correction methods typically require data acquired with reverse phase encoding directions, resulting in varying magnitudes and orientations of distortion, which allow estimation of an undistorted volume. Alternatively, additional field map acquisitions can be used along with sequence information to determine warping fields. However, not all imaging protocols include these additional scans, and those that do not cannot take advantage of state-of-the-art distortion correction. To avoid additional acquisitions, structural MRI (undistorted scans) can be used as registration targets for intensity-driven correction. In this study, we aim to (1) enable susceptibility distortion correction with historical and/or limited diffusion datasets that do not include specific sequences for distortion correction and (2) avoid the computationally intensive registration procedure typically required for distortion correction using structural scans. To achieve these aims, we use deep learning (3D U-nets) to synthesize an undistorted b0 image that matches the geometry of structural T1w images and the intensity contrasts of diffusion images. Importantly, the training dataset is heterogeneous, consisting of varying acquisitions of both structural and diffusion images. We apply our approach to a withheld test set and show that distortions are successfully corrected after processing. We quantitatively evaluate the proposed distortion correction and intensity-based registration against state-of-the-art distortion correction (FSL topup).
The results show that the proposed pipeline produces b0 images that are geometrically similar to non-distorted structural images and that more closely match state-of-the-art correction with additional acquisitions. In addition, we show generalizability of the proposed approach to datasets that were not in the original training/validation/testing datasets. These datasets included varying populations, contrasts, resolutions, and magnitudes and orientations of distortion, and all showed efficacious distortion correction. The method is available as a Singularity container, source code, and an executable trained model to facilitate evaluation.
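
As an illustration of what a quantitative comparison between a corrected b0 and a reference volume could look like, here is a minimal sketch in Python. The abstract does not name the similarity metrics used in the paper, so the choice of mean squared error, the function name `masked_mse`, and the synthetic volumes are all assumptions made for this example. It also assumes both volumes have already been resampled onto the same voxel grid.

```python
import numpy as np


def masked_mse(volume_a, volume_b, mask=None):
    """Mean squared intensity difference between two volumes on the same grid.

    Hypothetical helper for illustration only; not part of the published pipeline.
    """
    a = np.asarray(volume_a, dtype=np.float64)
    b = np.asarray(volume_b, dtype=np.float64)
    if a.shape != b.shape:
        raise ValueError("volumes must share the same voxel grid")
    if mask is None:
        # Without a mask, every voxel contributes to the average.
        mask = np.ones(a.shape, dtype=bool)
    mask = np.asarray(mask, dtype=bool)
    if not mask.any():
        raise ValueError("mask selects no voxels")
    diff = a[mask] - b[mask]
    return float(np.mean(diff ** 2))


# Synthetic stand-ins: a random "reference" volume and a copy shifted along
# one axis, a crude proxy for displacement along the phase-encoding direction.
rng = np.random.default_rng(0)
reference = rng.random((8, 8, 8))
shifted = np.roll(reference, 2, axis=1)

print("identical volumes:", masked_mse(reference, reference))
print("shifted volume:   ", masked_mse(reference, shifted))
```

With real data, the reference would typically be a topup-corrected b0 and the mask a brain mask, so that background voxels do not dominate the average.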

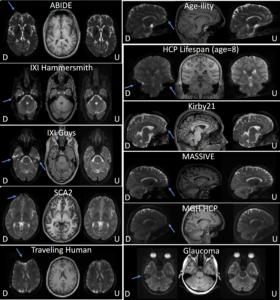

External validation of corrected b0 images after applying the synthesized b0 distortion correction pipeline to data from open-source studies. The distorted (“D”) and undistorted (“U”) b0 images are shown alongside T1 images. In all cases, effective distortion correction is visually apparent (distortions indicated by arrows).