Characterizing Low-cost Registration for Photographic Images to Computed Tomography

Michael E. Kim, Ho Hin Lee, Karthik Ramadass, Chenyu Gao, Katherine Van Schaik, Eric Tkaczyk, Jeffrey Spraggins, Daniel C. Moyer, Bennett A. Landman

bioRxiv link: https://www.biorxiv.org/content/10.1101/2023.09.22.558989v1

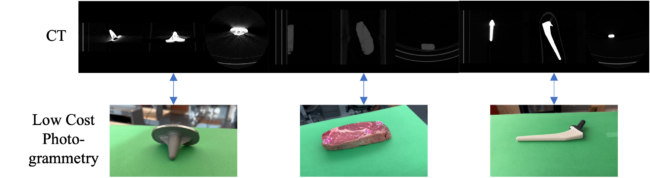

Figure 1. We map surfaces of phantom objects obtained from low-cost photogrammetry to surfaces obtained from volumetric CT scans of the same phantoms, to examine whether similar techniques could be applied to mapping histology data to volumetric CT data. The knee (left) and hip (right) implants are rigid objects with reflective and non-reflective surfaces, respectively, and their surfaces should be straightforward to map. The chuck-eye steak (center) is a moderately deformable phantom with a slightly reflective surface, which should more closely resemble biological tissue.

Abstract:

Mapping information from photographic images to volumetric medical imaging scans is essential for linking image spaces with physical environments, such as in image-guided surgery. Current methods for accurately mapping photographic images to computed tomography (CT) images can be computationally intensive and/or require specialized hardware. For general-purpose 3-D mapping of bulk specimens in histological processing, a cost-effective solution is necessary. Here, we compare a commercial 3-D camera and cell phone imaging, each integrated with a surface registration pipeline. Using surgical implants and a chuck-eye steak as phantoms, we obtain 3-D CT reconstructions and sets of photographic images from two sources: Canfield Imaging’s H1 camera and an iPhone 14 Pro. We reconstruct surfaces from the photographic images using commercial tools and open-source Neural Radiance Fields (NeRF) code, respectively. We then register the reconstructed surfaces to the CT surfaces with the iterative closest point (ICP) method. Landmarks were manually placed at three locations on each surface. Registration of the Canfield surfaces for the three objects yields landmark distance errors of 1.747, 3.932, and 1.692 mm, while registration of the corresponding iPhone surfaces yields errors of 1.222, 2.061, and 5.155 mm. Photographic imaging of an organ sample prior to tissue sectioning provides a low-cost alternative for establishing correspondence between histological samples and 3-D anatomical samples.
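The landmark distance errors above can be illustrated with a short sketch. It assumes that each reported value is the mean Euclidean distance, in millimeters, between the three registered photogrammetry landmarks and their CT counterparts; the abstract does not state how the three per-landmark distances are aggregated. It also assumes the photogrammetry landmarks have already been transformed by the surface registration. The function name `landmark_distance_error` and the coordinates are hypothetical, not data from the paper.

```python
import numpy as np


def landmark_distance_error(registered_landmarks, ct_landmarks):
    """Per-landmark Euclidean distances and their mean.

    Assumes both inputs are (N, 3) arrays of corresponding landmarks in the same
    units (e.g. mm), with the photogrammetry landmarks already transformed by
    the surface registration.
    """
    moving = np.asarray(registered_landmarks, dtype=float)
    fixed = np.asarray(ct_landmarks, dtype=float)
    if moving.shape != fixed.shape or moving.ndim != 2 or moving.shape[1] != 3:
        raise ValueError("expected two (N, 3) arrays of corresponding landmarks")
    distances = np.linalg.norm(moving - fixed, axis=1)
    return distances.mean(), distances


# Hypothetical coordinates in mm (not data from the paper)
ct_landmarks = [[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 10.0, 0.0]]
photo_landmarks = [[1.0, 0.0, 0.0], [10.0, 2.0, 0.0], [0.0, 10.0, 3.0]]
mean_err, per_landmark = landmark_distance_error(photo_landmarks, ct_landmarks)
print(per_landmark)  # [1. 2. 3.]
print(mean_err)  # 2.0
```

Reporting the per-landmark distances alongside the mean helps show whether a single poorly localized landmark dominates the error.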

Submitted to SPIE: Medical Imaging 2024

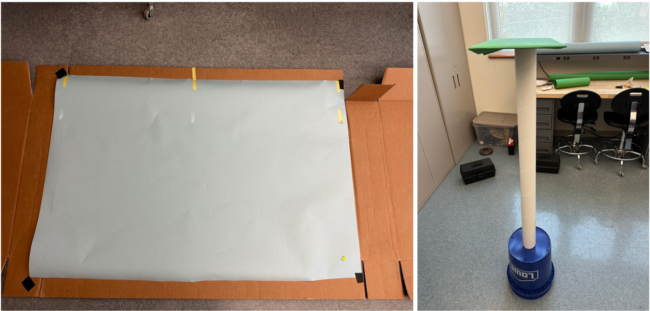

Figure 2. The setup for H1 camera photogrammetry (left) requires only a flat surface with light blue background paper and enough space to take a photo with the camera. The setup for the iPhone videos (right) uses a green background platform beneath the object with enough space to circle the object completely; any background color can be used as long as it is easily distinguishable from the surface of interest and not very reflective. The platform was raised to allow easier movement around the setup while holding the iPhone. Both setups use only ambient and fluorescent lighting, are free of nearby clutter, and are low-cost with minimal preparation.

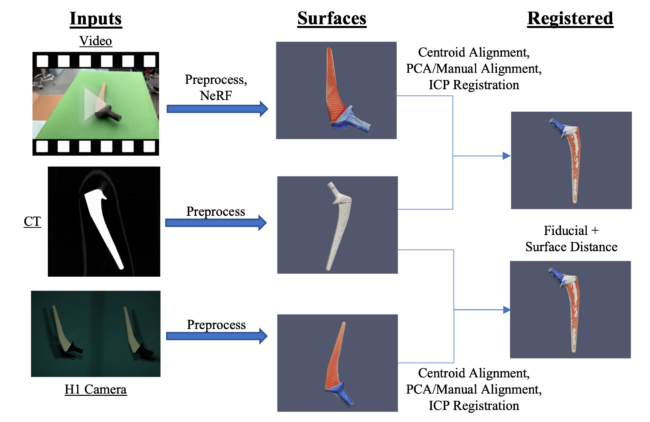

Figure 3. CT scans of the objects were segmented and processed to obtain 3-D surfaces. Photogrammetry surfaces of the same objects were obtained from subsampled 1080p video captured with an iPhone 14 Pro and from binocular images captured with a VECTRA H1 camera. After an initial centroid alignment and principal component analysis (PCA) alignment with manual inspection, the photogrammetry surfaces were registered to the CT surfaces with the ICP algorithm. Alignment was assessed using fiducial registration error and surface distances.
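The initialization and registration steps in Figure 3 can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the authors' pipeline: it uses point-to-point ICP with an SVD (Kabsch) rigid fit, brute-force nearest neighbors in place of a KD-tree, and an automatic choice among PCA axis-sign flips as a stand-in for the manual inspection described in the caption. All function names (`nearest_neighbors`, `best_rigid_transform`, `pca_align`, `icp`, `register`) are hypothetical.

```python
import itertools

import numpy as np


def nearest_neighbors(source, target):
    """Index and distance of the closest `target` point for each `source` point.
    Brute force (O(N*M) memory); a KD-tree is preferable for large meshes."""
    d2 = ((source[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
    idx = d2.argmin(axis=1)
    return idx, np.sqrt(d2[np.arange(len(source)), idx])


def best_rigid_transform(src, dst):
    """Least-squares R, t with R @ src[i] + t ~= dst[i] (Kabsch / SVD)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a rotation, not a reflection
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, dst_c - R @ src_c


def principal_axes(points):
    """Covariance eigenvectors as columns, ordered by decreasing variance."""
    _, eigvecs = np.linalg.eigh(np.cov(points.T))
    return eigvecs[:, ::-1]


def pca_align(moving, fixed):
    """Centroid + PCA initialization. Axis signs are ambiguous, so each proper
    sign combination is scored by mean nearest-neighbor distance (an automatic
    stand-in for manual inspection)."""
    m_mean, f_mean = moving.mean(axis=0), fixed.mean(axis=0)
    m_axes, f_axes = principal_axes(moving), principal_axes(fixed)
    best, best_err = None, np.inf
    for signs in itertools.product((1.0, -1.0), repeat=3):
        R = f_axes @ np.diag(signs) @ m_axes.T
        if np.linalg.det(R) < 0:
            continue  # skip reflections
        t = f_mean - R @ m_mean
        err = nearest_neighbors(moving @ R.T + t, fixed)[1].mean()
        if err < best_err:
            best, best_err = (R, t), err
    return best


def icp(moving, fixed, max_iter=50, tol=1e-6):
    """Point-to-point ICP; returns R, t, mean distance with moving @ R.T + t ~= fixed."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = moving.copy()
    prev_err = np.inf
    for _ in range(max_iter):
        idx, dist = nearest_neighbors(current, fixed)
        R, t = best_rigid_transform(current, fixed[idx])
        current = current @ R.T + t
        # Compose the incremental transform with the running total
        R_total, t_total = R @ R_total, R @ t_total + t
        err = dist.mean()
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total, nearest_neighbors(current, fixed)[1].mean()


def register(moving, fixed):
    """PCA initialization followed by ICP; returns the combined R, t and final error."""
    R0, t0 = pca_align(moving, fixed)
    R1, t1, err = icp(moving @ R0.T + t0, fixed)
    return R1 @ R0, R1 @ t0 + t1, err
```

Calling `register(photo_points, ct_points)` on two `(N, 3)` vertex arrays returns a rotation, a translation, and the mean nearest-neighbor distance; the same `R` and `t` can then be applied to the photogrammetry landmarks before computing landmark errors. Scoring the PCA sign flips matters because principal axes are only defined up to sign, and ICP is a local method that can converge to a wrong alignment from a flipped start.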

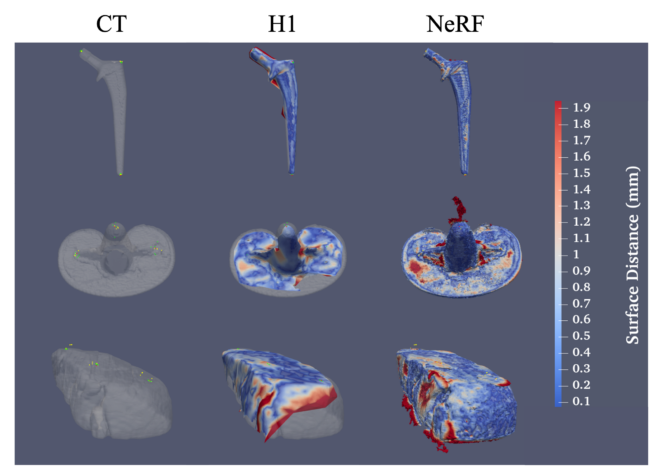

Figure 4. NeRF surfaces capture much finer detail and sharper edges than surfaces from the VECTRA H1 camera, but are much noisier and more susceptible to artifacts from light reflections. (Top) From left to right: the CT surface of a hip implant with the CT fiducials and both the H1 and NeRF surface fiducials overlaid; an H1 surface of the hip implant registered to the CT surface; and a NeRF surface registered to the CT surface. Red markers indicate CT fiducials, green markers indicate H1 surface fiducials, and yellow markers indicate NeRF surface fiducials. Corresponding results are shown for the knee implant (center) and the chuck-eye steak (bottom). Surface colors indicate the distance from each point on the photogrammetry surface to the CT surface.
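The per-point surface distances used to color the surfaces in Figure 4 can be approximated as below. This sketch assumes the distance is measured from each registered photogrammetry vertex to the nearest CT vertex; the caption does not say whether the authors used point-to-vertex or exact point-to-triangle distances. Because CT vertices lie on the CT surface, the nearest-vertex distance is an upper bound on the exact point-to-surface distance. The function names `vertex_to_surface_distances` and `summarize` and the planar test data are hypothetical.

```python
import numpy as np


def vertex_to_surface_distances(photo_vertices, ct_vertices, chunk=1024):
    """Distance from each registered photogrammetry vertex to its nearest CT vertex.

    CT vertices lie on the CT surface, so this is an upper bound on the exact
    point-to-surface distance. Work is chunked to bound memory use.
    """
    photo = np.asarray(photo_vertices, dtype=float)
    ct = np.asarray(ct_vertices, dtype=float)
    out = np.empty(len(photo))
    for start in range(0, len(photo), chunk):
        block = photo[start:start + chunk]
        d2 = ((block[:, None, :] - ct[None, :, :]) ** 2).sum(axis=2)
        out[start:start + chunk] = np.sqrt(d2.min(axis=1))
    return out


def summarize(distances):
    """Summary statistics of per-vertex distances (same units as input, e.g. mm)."""
    return {
        "mean": float(np.mean(distances)),
        "median": float(np.median(distances)),
        "p95": float(np.percentile(distances, 95)),
        "max": float(np.max(distances)),
    }


# Hypothetical data: a flat 10 x 10 CT vertex grid and a copy offset 0.5 mm in z
xs, ys = np.meshgrid(np.arange(10.0), np.arange(10.0))
ct_vertices = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)])
photo_vertices = ct_vertices + np.array([0.0, 0.0, 0.5])
d = vertex_to_surface_distances(photo_vertices, ct_vertices)
print(summarize(d))  # every statistic is 0.5
```

The per-vertex array `d` is what would be mapped to a color scale on the photogrammetry surface, while the summary statistics give a compact comparison between H1 and NeRF surfaces.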